Contents

An All-Around Better Horse

AI and the Revolution in Design, Engineering, and Problem-Solving Methodology

This illustrated essay invites you to imagine how we can create a more sustainable, creative, and livable world by applying the transformative power of AI to design, engineering, and everyday problem-solving. It examines how reimagining design and engineering processes can empower both novices and experts to bring ambitious ideas to life.

For the past 15 years, I’ve worked on creating tools that connect AI research to real-world applications with the goal of making design and engineering more accessible and impactful. This essay draws on those experiences to envision how AI can shape the future of our tools and the built systems around us. Starting with a broad vision and foundational premises, it then focuses on specific interaction mechanisms, optimization opportunities, industry implications, and areas where AI can have a significant impact through the orchestration of design and engineering pipelines.

Whether you’re a researcher, designer, engineer, or simply curious about the future of the built world, I invite you to join me in this exploration.

- Introduction
- Problem Decomposition: Break down complex problems and navigate underlying decisions in more intuitive and efficient ways.
- Just-in-Time Functionality: Create the perfect interface, feature, or workflow for the task at hand.
- Holistic Involvement: Support every aspect of a project, solve problems across disciplines, and connect the dots.
- Industry Transformation: Open the door to bold new opportunities without leaving existing users and methodologies behind.
- Real World Impact: Create a better world by using powerful technologies to address both everyday and existential challenges.
- Call to Action: An invitation to create meaningful change together.

If I had asked people what they wanted, they would have said faster horses.

Knowing what to want is a skill. It requires a systematic approach to defining goals, evaluating options, analyzing available data and assessing potential outcomes. Above all, it requires the audacity to imagine that things could be different, that an existing need could be met in a better way, or that something entirely new could emerge, transforming how we live, work, or understand the world.

It’s impossible to keep up with the latest developments across every field, so we rely on a kind of innovation republic, where domain experts and visionaries like Henry Ford and Steve Jobs represent our interests by recognizing the transformative potential of new technologies and shaping them into impactful products.

AI is enabling a shift towards something more like a direct democracy of innovation, where individuals can bypass traditional gatekeepers to create solutions for themselves.

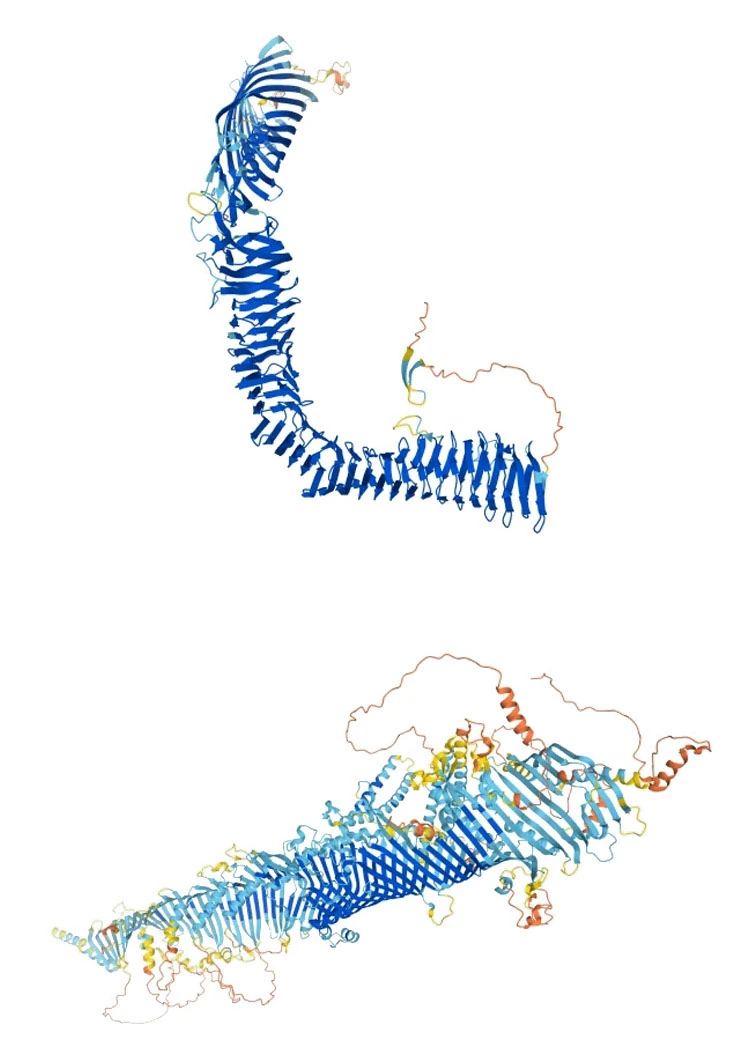

Over the last few years, we have seen the beginnings of the revolution in AI-driven scientific discovery. DeepMind’s Nobel Prize-winning protein structure prediction system, AlphaFold, and tools like Sakana AI’s AI Scientist highlight how AI can enable foundational breakthroughs.

These discoveries may lay the groundwork, but they do not directly constitute the downstream solutions needed to address real-world problems. To bridge this gap, it is essential to augment the methodologies of both foundational sciences and applied fields like functional design and engineering, where AI-driven innovation can help to tackle humanity's toughest challenges and improve everyday life.

Outcomes in design and engineering work can be enhanced by the advanced reasoning, holistic planning, and deep technical knowledge present in agentic AI systems. However, for AI to select real-world problems that matter to humans and solve them in ways that align with our sensibilities, it stands to reason that human participation of some kind is needed.

Human contributions to this work will inevitably evolve and take many forms, from direct collaboration with AI to indirect influence on its behavior, with participation ranging from hands-on tool use and intent expressions to passive guidance by individuals, groups, and even the broader public.

Tools of this kind will enable the development of more efficient, sustainable, and inspiring products and buildings. They can also supplement the work of organizations like the Peace Corps, the International Red Cross, and the U.S. Army Corps of Engineers, while directly empowering communities and individuals to tackle challenging problems.

The full realization of this future will require significant technical advancement, a re-envisioning of design and engineering software, and a reconsideration of fundamental assumptions, such as what constitutes a "user."

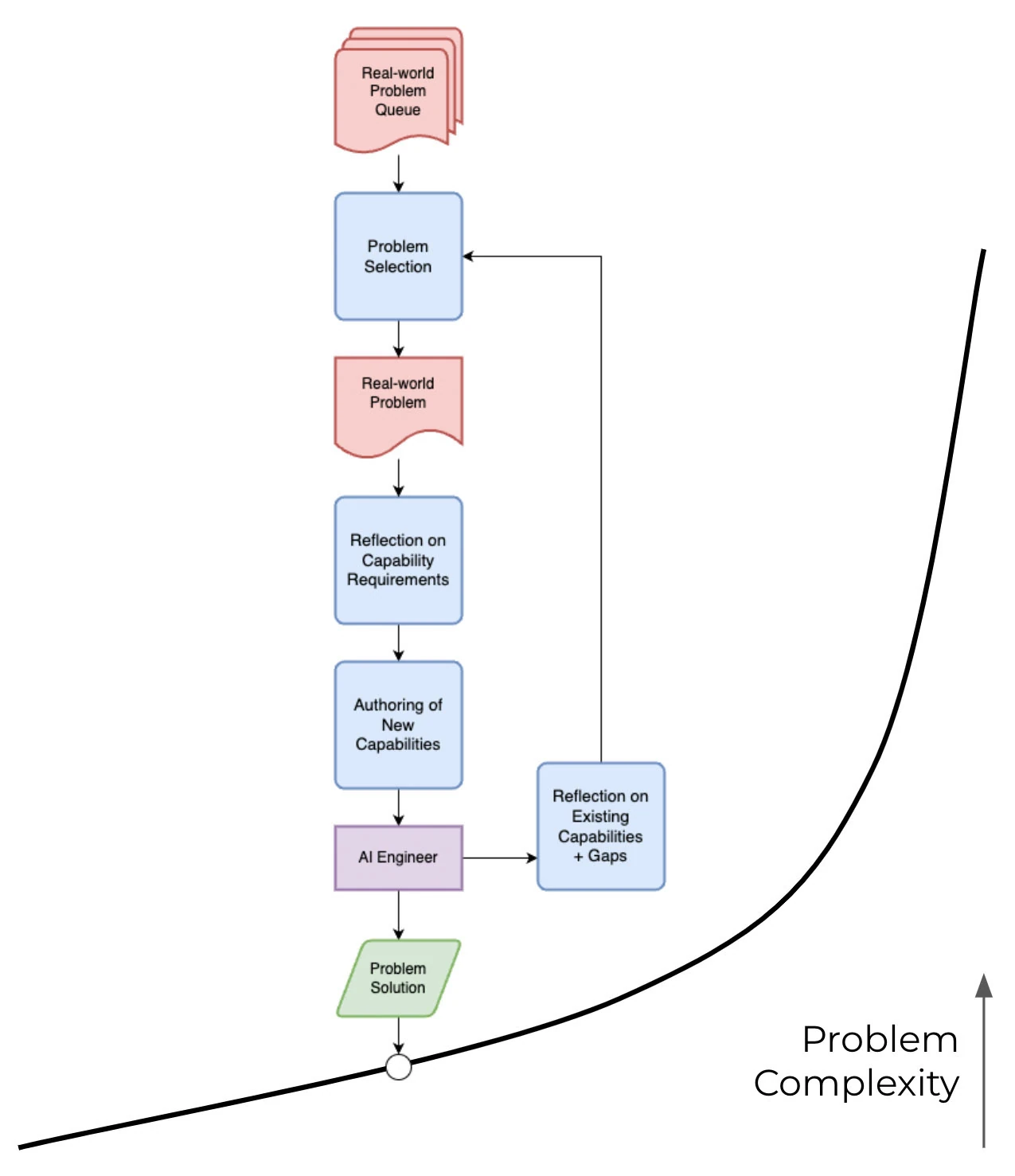

Importantly, we do not need to wait for AGI to get started. By taking a scaffolding approach that pairs problem selection with the iterative extension of capabilities, we can tackle progressively harder problems and steadily increase the system’s real-world impact.

Professional tools are hard to learn. But when a novice struggles with an application such as AutoCAD or Photoshop, it is quite likely that they are impeded as much by their limited knowledge of the design discipline itself as by their unfamiliarity with the tool.

Take a seasoned darkroom photographer with no digital experience, for example. Their ability to identify why an image “looks bad,” determine a sequence of darkroom operations to improve it, and translate those operations into the vocabulary and functions of a digital tool by hunting around the menu system would likely surpass a non-photographer’s capacity to achieve the same results.

In some respects, the ability to decompose problems is a general-purpose skill. Experience breaking down complex problems in one field should provide at least some benefit when reasoning about problems in a completely different field.

However, familiarity with the underlying concepts, techniques, and vocabulary of a particular discipline is essential for effective problem-solving. While general problem-solving skills provide the framework, domain knowledge is needed to ground solutions in the specific constraints and characteristics of the field.

In this sense, AI co-pilots have limited potential to make professional tools and problem domains more accessible to novice users. They assume an already decomposed problem and primarily assist with the mapping of domain-relevant terms to the function names and parameters of the host tool. The real challenge lies not in the user's ability to directly invoke specific features but in their understanding of how to map the problem onto those features.

Innovation in LLM-based reasoning techniques, memory, and task orchestration has led to significantly more capable agent systems that can perform tasks such as conducting financial analyses, building web applications, or designing computer chips.

Numerous agent architectures have already emerged and countless articles provide detailed overviews of their designs, operational principles, merits, and limitations. I will therefore forgo a broad overview here but will later discuss specific architectural ideas relevant to the opportunities explored in this essay.

In addition to making design and engineering accessible to novices, agentic systems have the potential to push the boundaries of these disciplines beyond what professionals could achieve on their own.

When humans solve problems, we cannot avoid historical precedent. Even the rejection of an established convention (e.g. a three-wheeled car) is a reaction to it. We cannot help it – we live in the world and are surrounded by those earlier solutions.

In many ways, this is a good thing. We learn from the successes and failures of the past and build upon the foundations of distilled knowledge, enabling us to build ever higher instead of always starting from scratch.

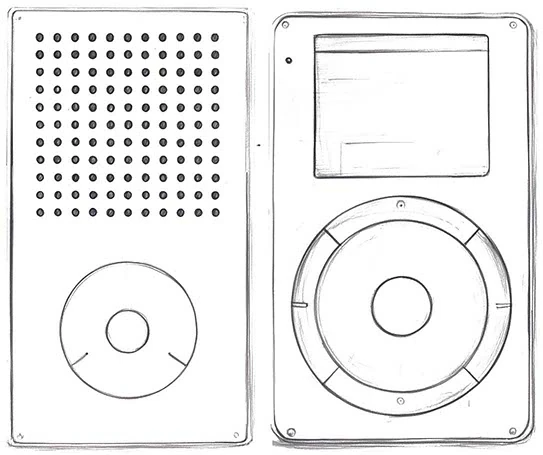

Braun’s T3 Pocket Radio and Apple’s iPod

This dependence on history also presents challenges for the advancement of fields in math, science, engineering and design. Our awareness of history often prevents us from seeing beyond outdated limitations and suboptimal strategies.

In addition to our historical biases, human-driven innovation is naturally also constrained by our mental capacity. The challenge of juggling numerous interconnected components, grappling with conceptually intricate ideas, attempting to master knowledge across a range of fields, and the temporal constraints of our waking hours all limit our potential with respect to conceptualizing and building complex systems.

Like humans, contemporary LLMs rely on human-generated knowledge, limiting their ability to generate truly novel solutions beyond the patterns present in their training data. Unlike humans, however, LLMs lack a direct mechanism for independent experimentation, which limits their ability to extend their knowledge.

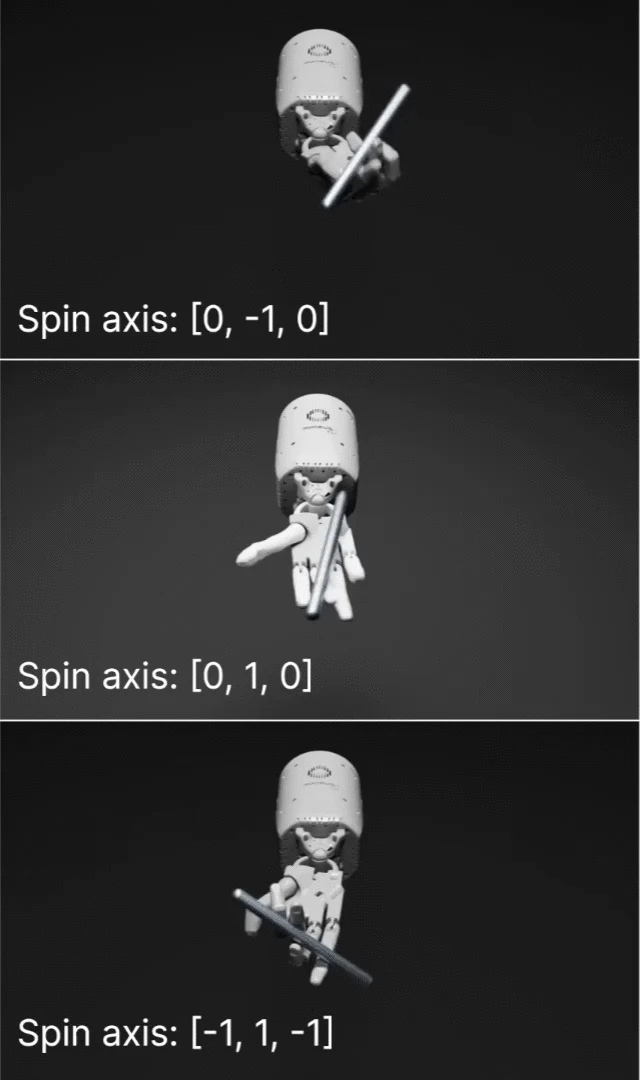

Reinforcement Learning (RL), on the other hand, provides a powerful mechanism for generating novel solutions through structured experimentation, enabling it to bypass both historical and intellectual constraints associated with human and LLM capabilities. RL agents learn through trial and error, interacting with an environment and receiving rewards or penalties based on their actions. This process allows them to discover effective strategies without relying on pre-existing human knowledge.
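The trial-and-error loop described above can be illustrated with a minimal sketch. It uses an epsilon-greedy multi-armed bandit, one of the simplest RL settings, chosen here purely for illustration. The function name `run_bandit`, the reward means, and the Gaussian noise model are all assumptions made for this example rather than anything drawn from a specific system.

```python
import random

def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Learn action values by trial and error with an epsilon-greedy policy."""
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions   # the agent's current value estimate per action
    counts = [0] * n_actions        # how often each action has been tried

    for _ in range(steps):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])

        # The environment answers with a noisy reward (assumed Gaussian noise).
        reward = rng.gauss(true_means[action], 1.0)

        # Incremental average: nudge the estimate toward the observed reward.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts

# Hypothetical environment: three actions whose true average rewards are hidden
# from the agent. It has to discover that the third one pays best.
estimates, counts = run_bandit([0.2, 0.5, 1.5])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("estimated values:", [round(v, 2) for v in estimates])
print("action with the highest estimate:", best)
```

The agent is never told which action is best. It infers this from rewards alone, which is the property that lets RL find strategies without relying on prior human knowledge. The hand-written reward line is also the part that becomes expensive to design in realistic problems.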

Despite its potential for novel invention, RL has been limited in practice by the significant human effort required to design effective reward functions and experimentation environments, restricting its application to carefully designed problem spaces.

Recent advances have demonstrated the use of LLMs in automating these RL configuration tasks. By supporting the creation of reward functions, experiment design, and other essential components, LLMs can facilitate wider and more effective RL deployment, enabling the combined systems to address problems that were previously out of reach.

Nvidia's Eureka uses LLMs to design reward functions for robotic learning

If an AI system were to develop a timely cure for a horrible disease, society would rejoice and take advantage of the machine’s achievement regardless of whether we understood the solution’s underlying principles.

Of course, it would be beneficial for future medical research to be able to incorporate those ideas into the academic literature. But, if this were not possible, we could perhaps console ourselves with the notion that as long as science can continue to advance and achieve our goals in this way, we should have no practical reason to require continued human comprehension.

Yet, if we were not able to comprehend the solution or its underlying principles, it is doubtful that we would be able to continue to articulate meaningful goals or assess whether they were being achieved.

DeepMind’s AlphaFold

Though an overarching goal like curing a horrible disease is easy to understand and universally desirable, reasoning through the underlying considerations can be more difficult. It may be challenging to determine how each technical consideration maps onto the broader goal and key strategic or ethical touchpoints. Some problem domains may be so abstract or intricate that they are challenging to grasp even at their most basic level.

Worthwhile goals don’t arise in a vacuum – they build upon a foundational understanding of a discipline’s underlying concepts and techniques, as well as knowledge of what is possible, what has been tried, and which constraints are absolute. Lacking this foundational knowledge limits not only our ability to solve problems but also our ability to conceptualize novel or meaningful goals. As Bob Dylan noted, “to live outside the law, you must be honest.” Without grounding in the fundamentals of a field, we risk losing the ability to reason through problems, contribute to innovation, and act as responsible stewards of the future.

Therefore, it is not enough for the tool to merely decompose the user’s goals. It must also help the user develop an understanding of the domain and how component decisions impact their overall objectives.

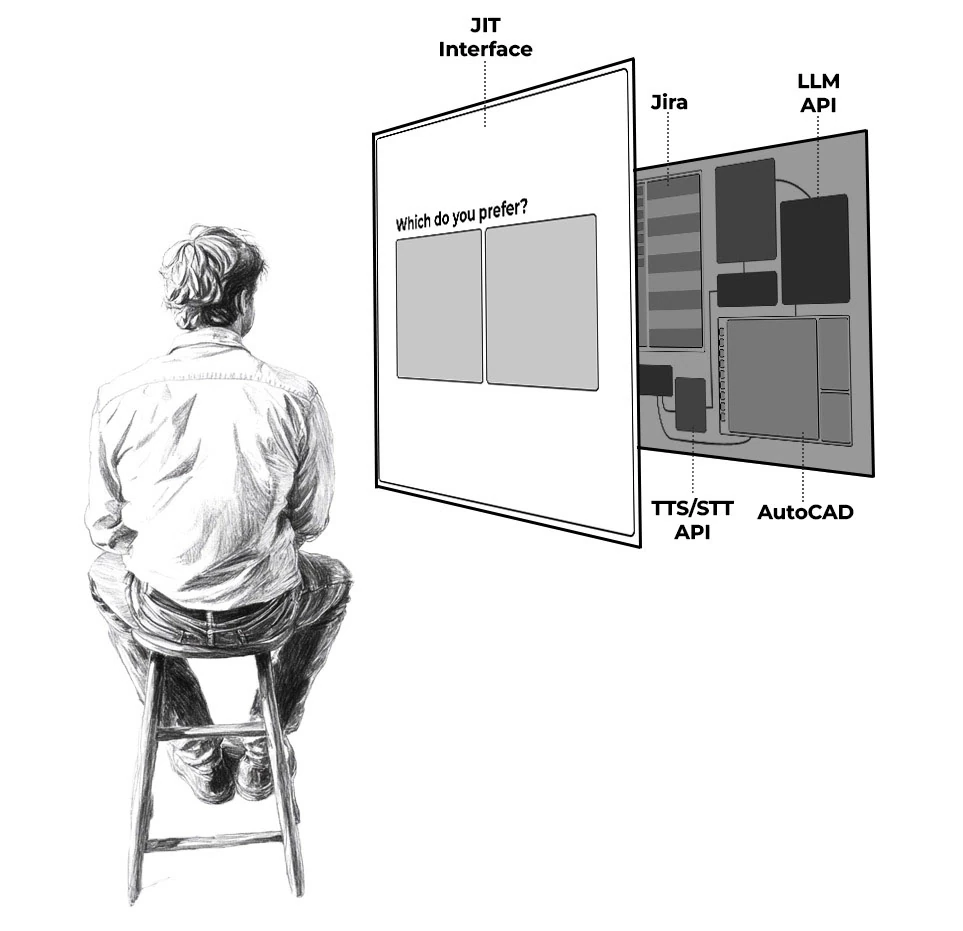

This could include just-in-time curricula or interactive explainers that focus on the specific concepts and skills relevant to the task. Of course, expecting users to acquire deep expertise equivalent to a PhD before weighing in on decisions is neither practical nor democratizing.

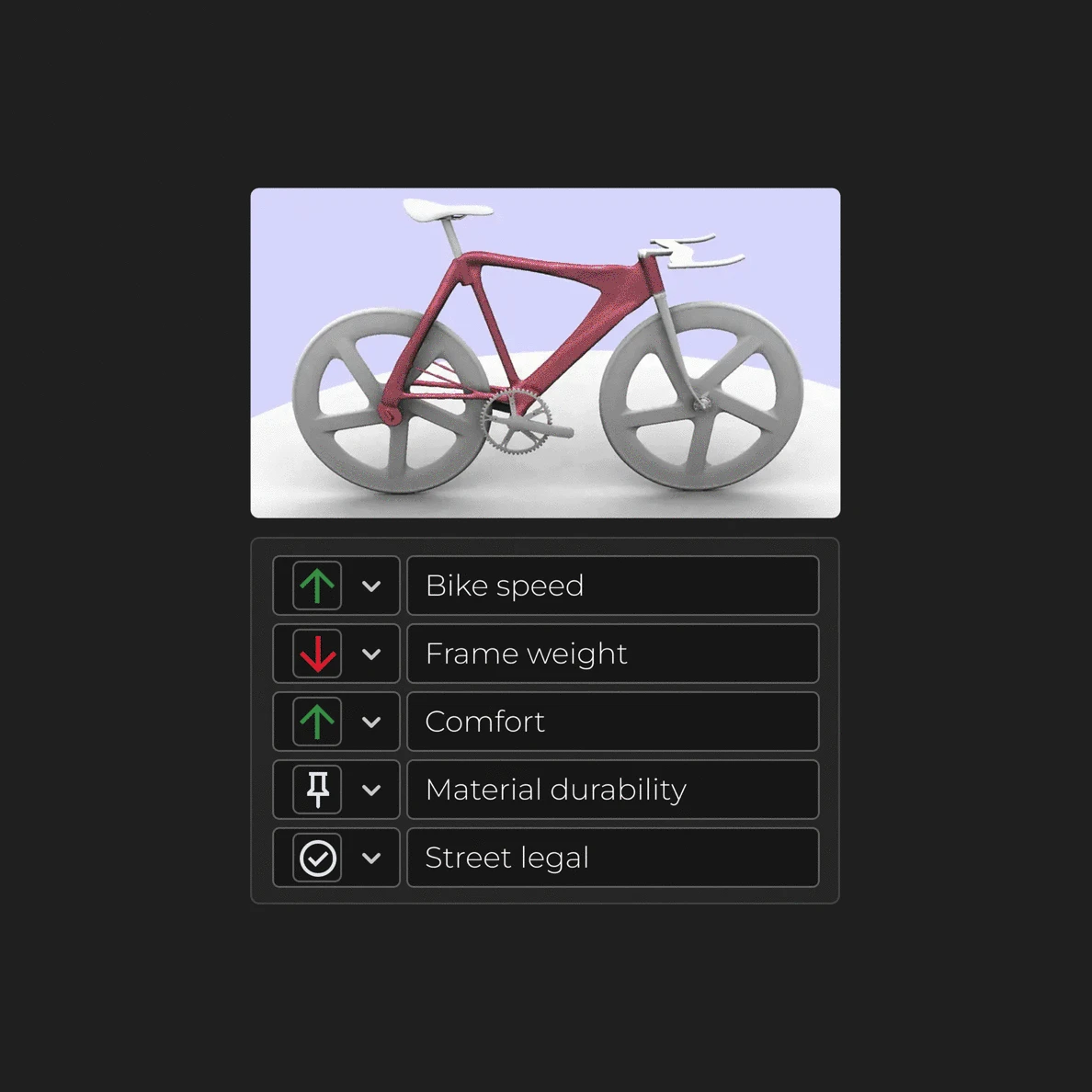

A combination of short-form just-in-time courses, along with in-context interactive mechanisms that enable users to directly control aspects of the design while also helping to elucidate the underlying principles and their impact, provides a more practical alternative. Rooted in Constructivist principles, this approach integrates education into the interactions themselves, allowing users to deepen their understanding while making progress toward their goals.

Navigating a set of interrelated decisions is a skill in its own right. When the domain is complex or unfamiliar, this process can be especially daunting. The feeling of not knowing where to begin or what to do next is dispiriting and can turn people away from their work. Creative tools should do more to aid users in navigating the creative process.

While there is no singular formula for creative work, a common pattern across both the arts and sciences is to utilize a loosely structured process of alternately outlining and refining the outcome.

Think of drawing a portrait. If the artist focuses on perfecting the nose before considering the rest of the subject, the proportions are likely to be off and the overall composition may not leave adequate space for all the necessary elements. Instead, the artist typically starts by sketching faint outlines to position major landmarks, then shifts their attention back and forth between the overall composition and individual areas – adjusting spatial relationships and layering detail as they go.

While the specifics differ from one discipline to the next, this iterative methodology is common across creative fields. However, engaging in this process can be very demanding of one’s attention and energy. If users cannot navigate it with comfort and confidence, they will likely grow weary of their work.

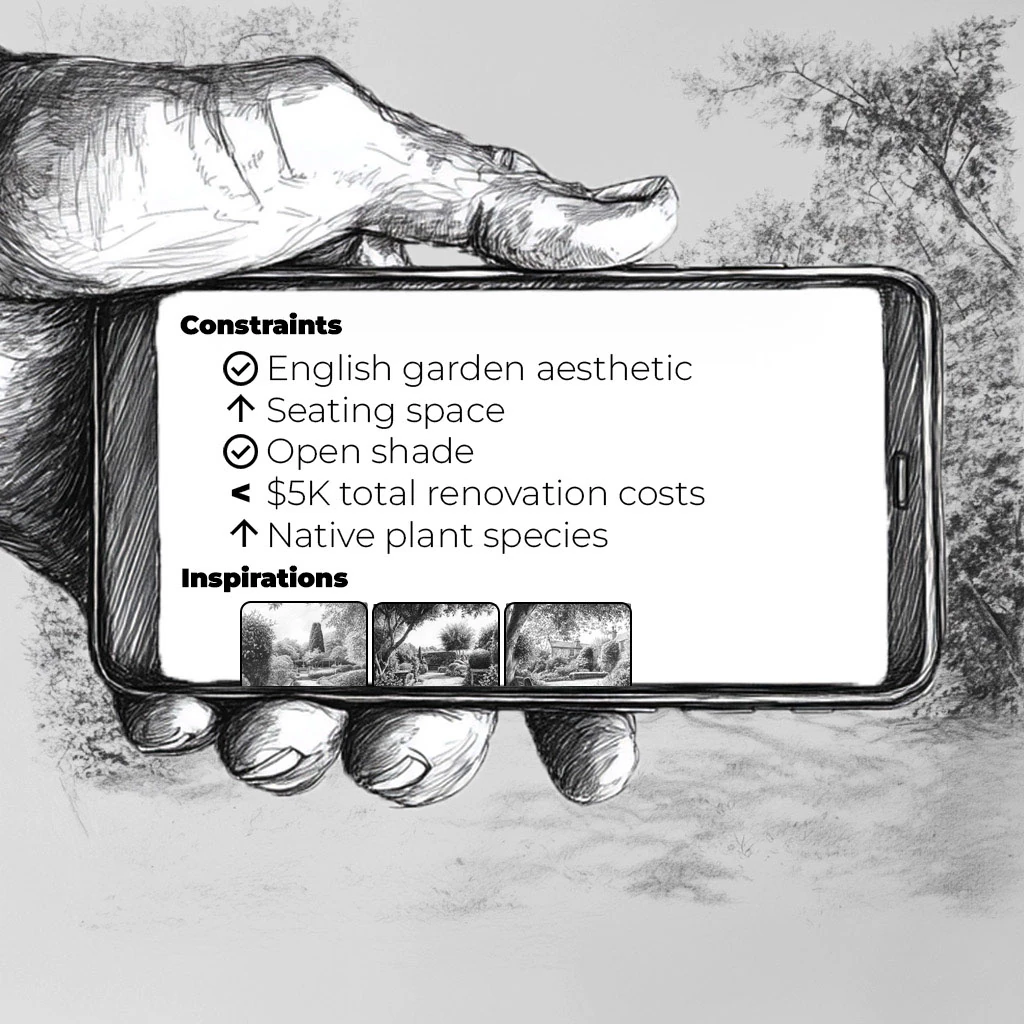

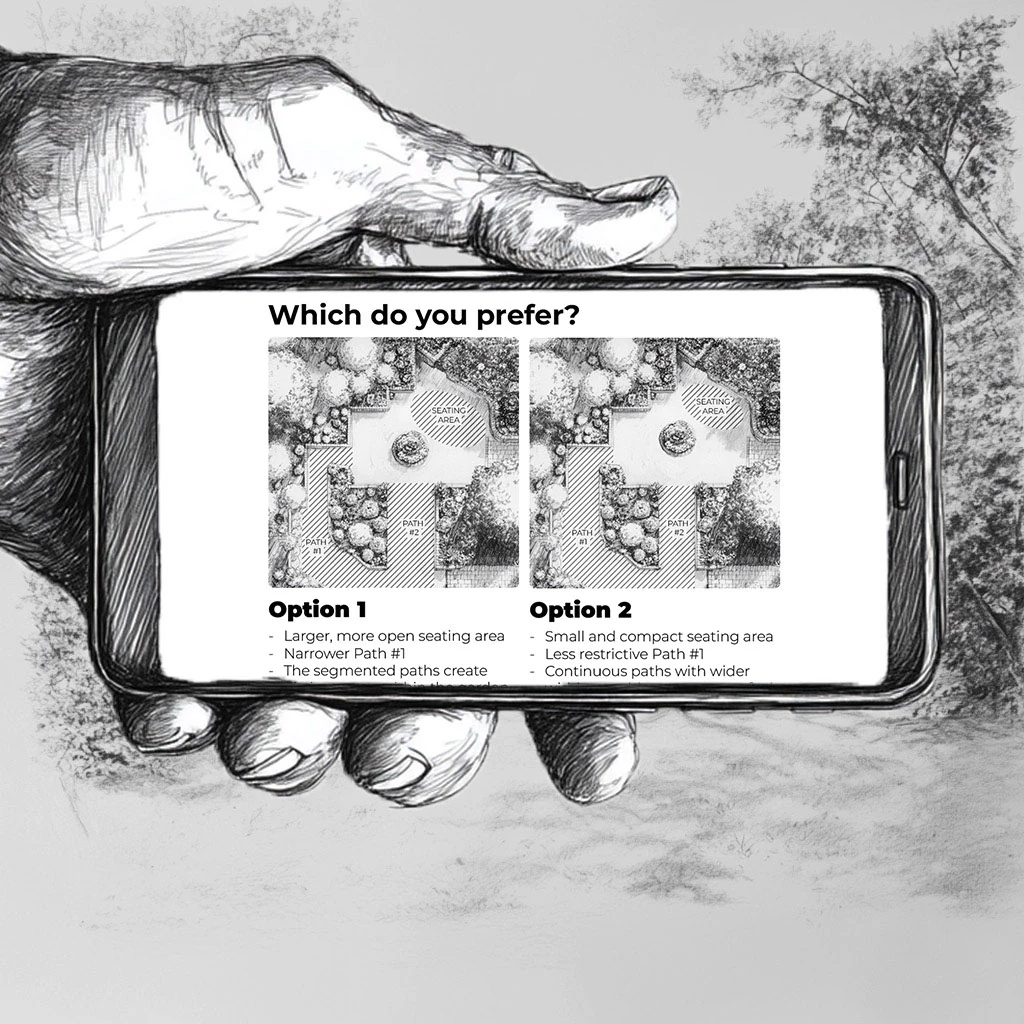

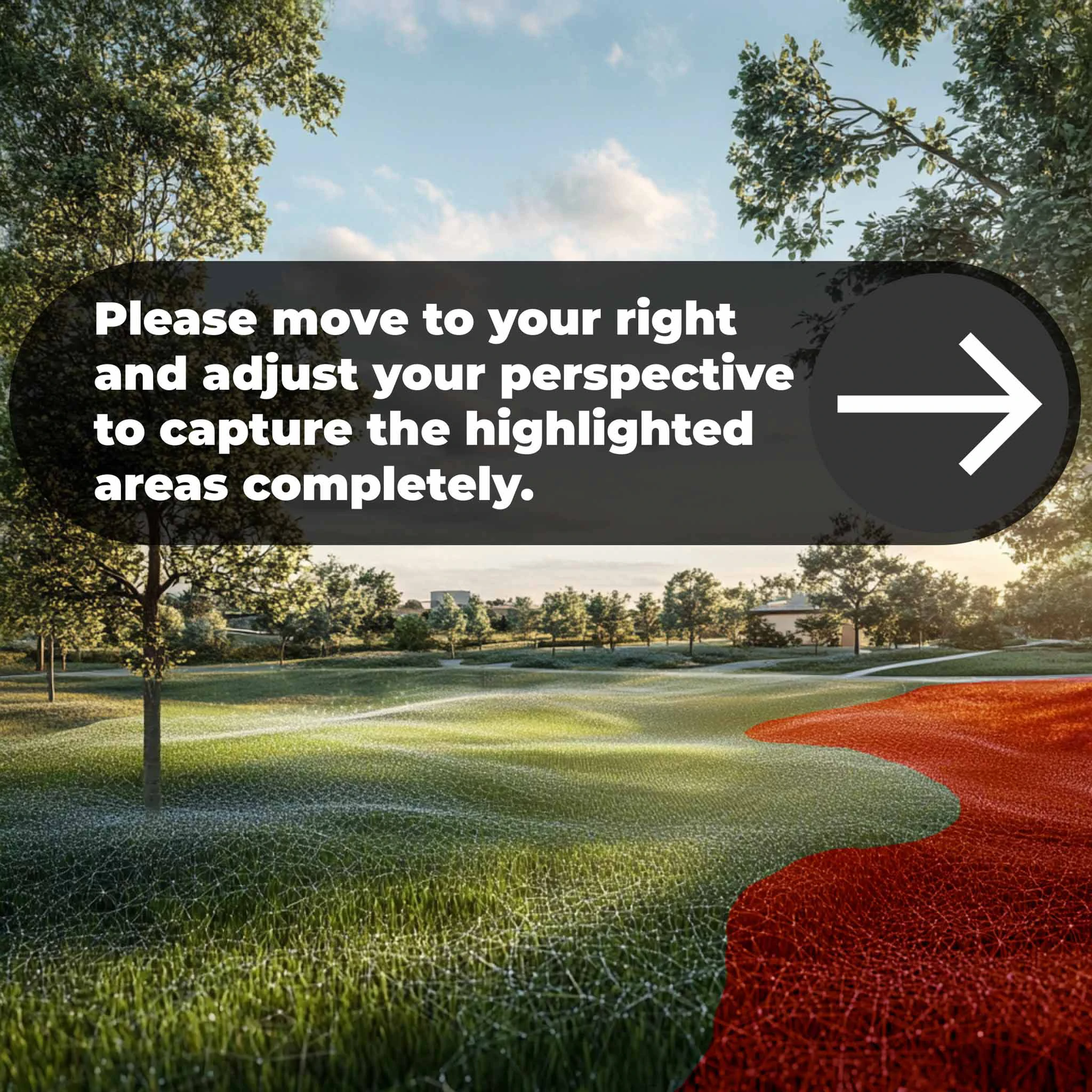

Creative tools can provide direct assistance in navigating this iterative decision-making process. They can help focus the user on a specific task and get his or her input on key issues through guided workflows that dynamically shift the application’s visual focus to specific regions of the design, present purpose-built interfaces to solicit input on individual decisions, and provide relevant information and visualizations to inform decision making.

These guided workflows and proactive mechanisms will enable users to engage more intuitively and confidently with their work, empowering them to articulate ideas, solve problems, and navigate the decision-making process in a more dynamic and enjoyable way.

AI offers transformative potential in what we can achieve individually and as a society. To actualize this potential, we cannot keep AI at the margins of our workflows or merely retrofit it into isolated features of conventional tools. We need to integrate it deeply into the substantive elements – the core processes that drive innovation and discovery in design, engineering and science.

This requires a fundamental reimagining of the tool, from its interaction mechanisms all the way down to its most fundamental assumptions.

Traditional workflows are no longer practical, and simple chatbot-style text inputs fall short for deep human-AI collaboration. Next-generation tools need a unified software and experience architecture that approaches problem-solving in an AI-native way rather than retrofitting new capabilities into existing mechanisms that were designed for manual, linear workflows.

In the next section, we will explore how just-in-time interfaces and functionality, enabled by LLM-driven code generation, will shape the next generation of creative tools and leverage the respective strengths of humans and AI to help us do more, think bigger, and create a better world.

Legacy professional tools tend to rely on static workflows and a labyrinth of menus that provide access to a wide range of granular features. While these features can ultimately address a broad assortment of needs, the abundance of options tends to overwhelm new users and can also hinder experienced ones.

These tools are a product of their time. Without the ability to dynamically adapt functionality, toolmakers had to anticipate user needs and define workflows in advance through market and customer research.

With the advent of AI, a new approach becomes possible.

A one-size-fits-all tool does not fit anyone’s hand perfectly. By trying to accommodate everyone, it ends up falling short of meeting any particular individual’s exact needs.

Purpose-built interfaces can help users focus on specific tasks, adapting fluidly to their needs and ways of working in real time. Interfaces of this kind will enable users to engage with creative work on their own terms, going beyond the toolmaker’s preconceived workflows to achieve novel outcomes.

By employing techniques like intent decomposition, preference learning, and code generation, we can create tools that align with both the task and the user’s way of thinking. This approach not only facilitates decision-making but also empowers users to explore new ideas and unlock creative possibilities that rigid, predefined workflows would be unlikely to reach.

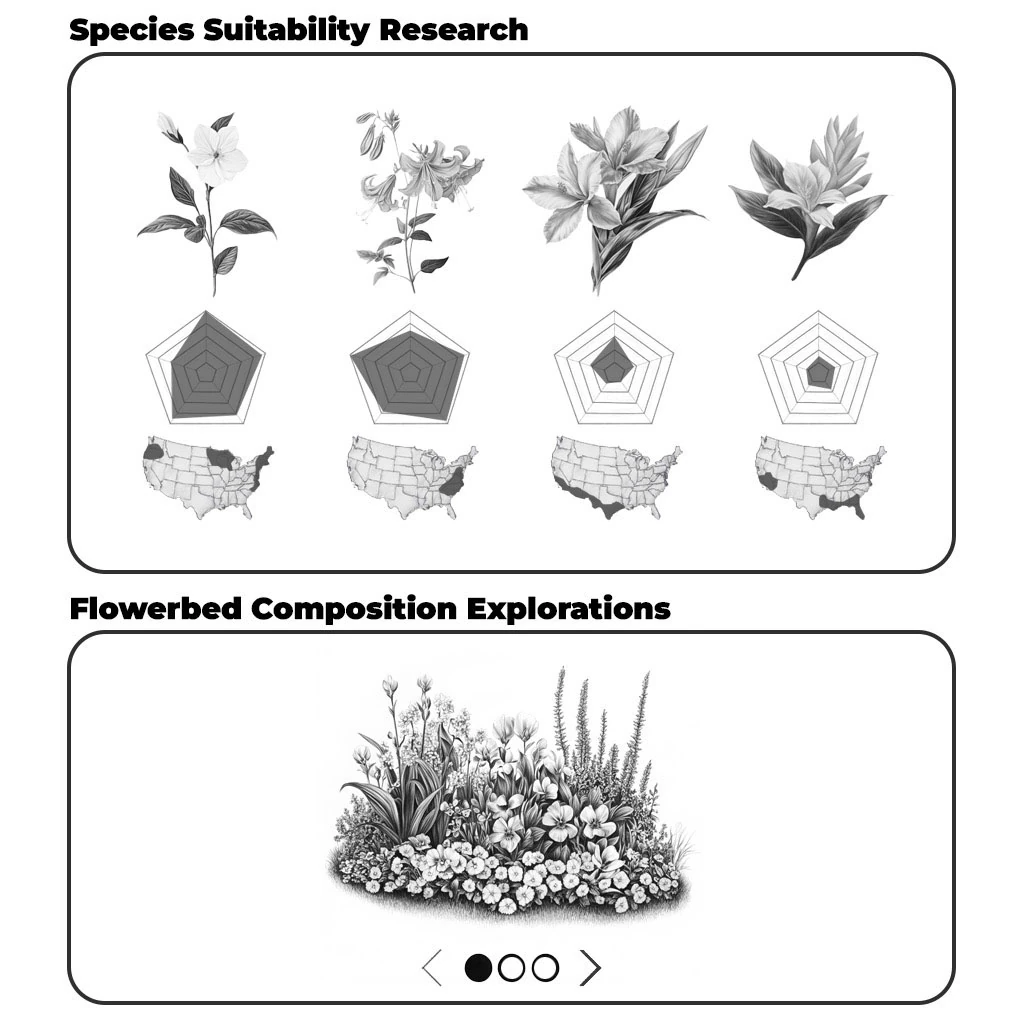

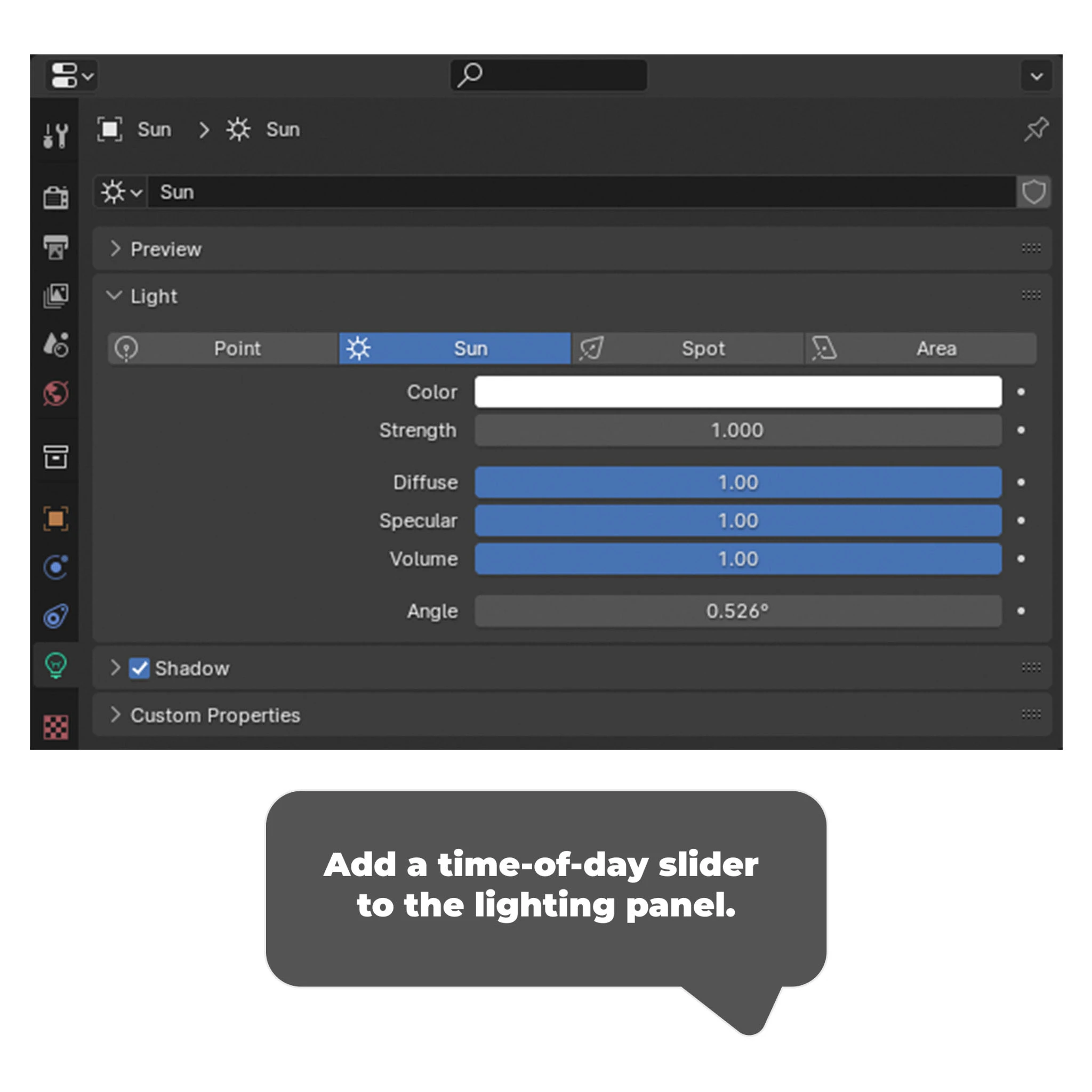

In addition to providing task-specific control mechanisms, just-in-time interfaces can transform how information is presented to users. Well-designed layouts, charts and visualizations conceived around the nature of the data as well as the user’s literacies and learning style will help to make complex information more digestible. By delivering the right information at the right time, just-in-time interfaces will support informed decisions and enable users to achieve better outcomes.

Camera moves within an application’s interface can be employed to focus the user’s attention on specific issues or points of interest. Whether highlighting a particular object in a 3D environment or an intriguing data point in a spreadsheet, these guided movements foster a shared perspective between the user and AI agent.

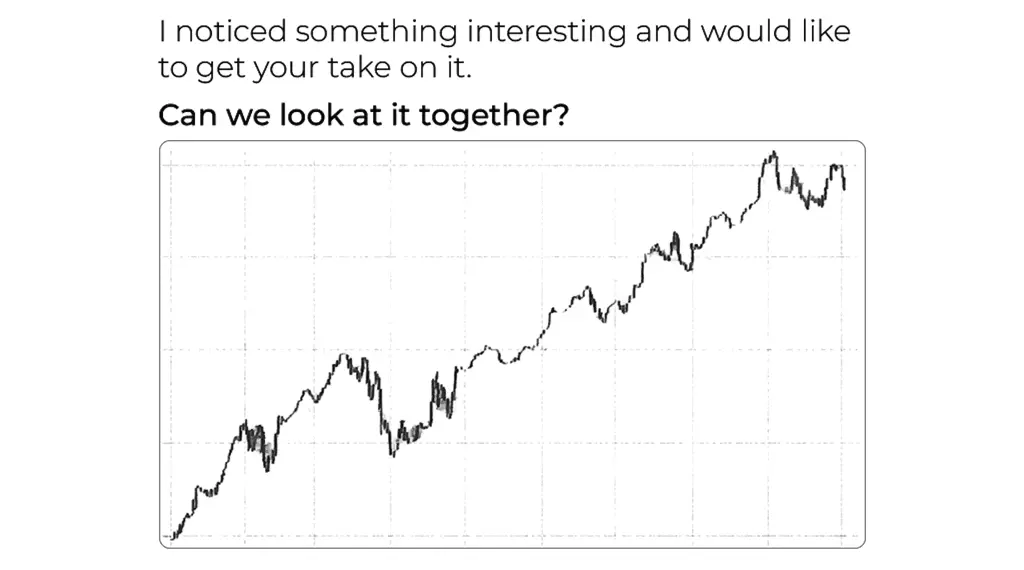

A cinematic zoom on a key finding

Much like a cinematic dolly move that brings a critical detail into focus, viewport cinematography can help draw the user’s attention to areas that require further consideration. Users can also direct the agent’s focus, creating a collaborative mechanism that improves decision-making and enhances the efficiency, immersiveness, and usability of the software.

Code generation, in combination with function-calling and computer-use, can also be leveraged to dynamically create new under-the-hood capabilities as well as seamlessly combine features from existing APIs and GUI-driven applications.

This enables the system to draw upon both advanced custom functionality and powerful, hard-to-build systems, such as CAD and simulation engines, without requiring that the user be cognizant of the underlying details of each component.

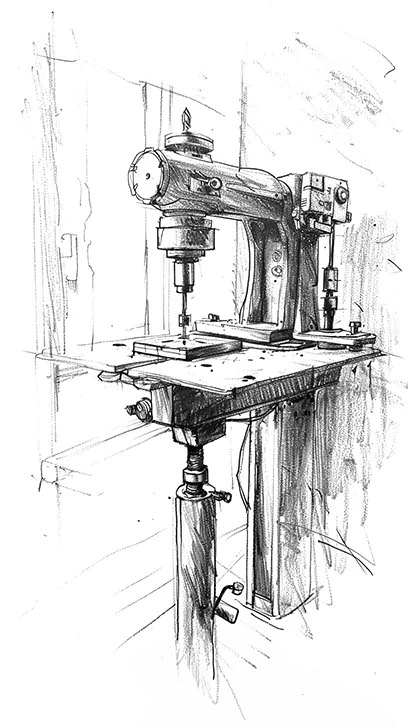

In woodworking, specialized or custom-built guides called jigs are used to streamline repetitive tasks, simplify workflows, and ensure consistent results. Digital tools can adopt this concept by allowing users to customize interfaces and workflows with the same interaction mechanisms they use to transform content.

A jig inspired by a visual reference

Unlike traditional plug-ins, which typically require coding expertise to develop, these purpose-built harnesses can be created directly within the system’s existing interaction model. This lets users seamlessly shift between developing their project and refining the tools they use, without needing additional technical skills. Blurring the distinction between making and using software lets the user organically tune both the tool and the outcome to meet their needs, leading to an ever more fruitful experience.

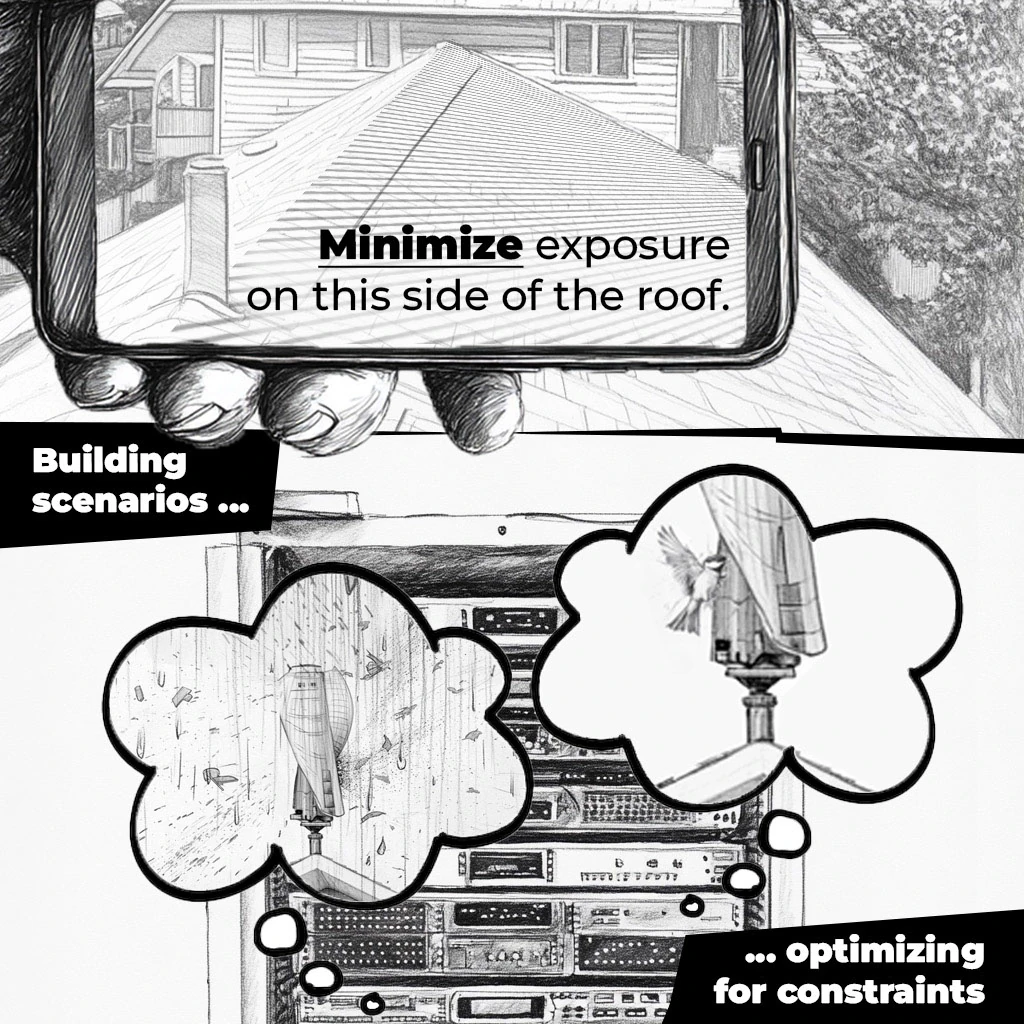

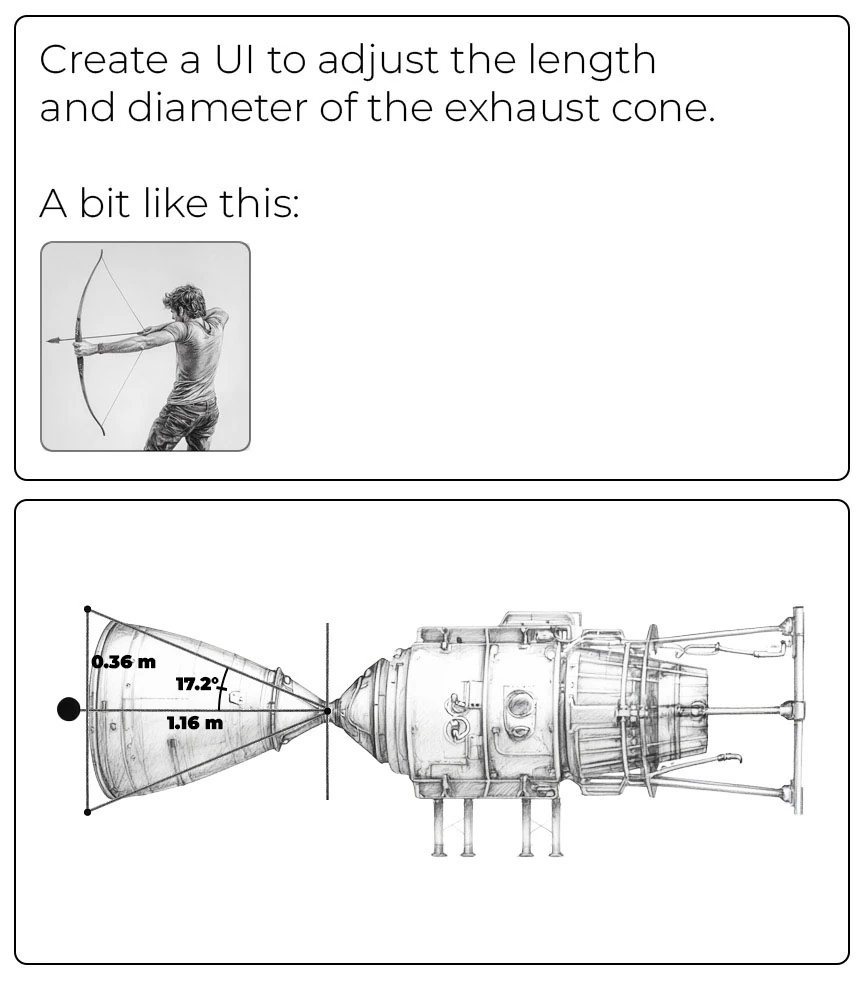

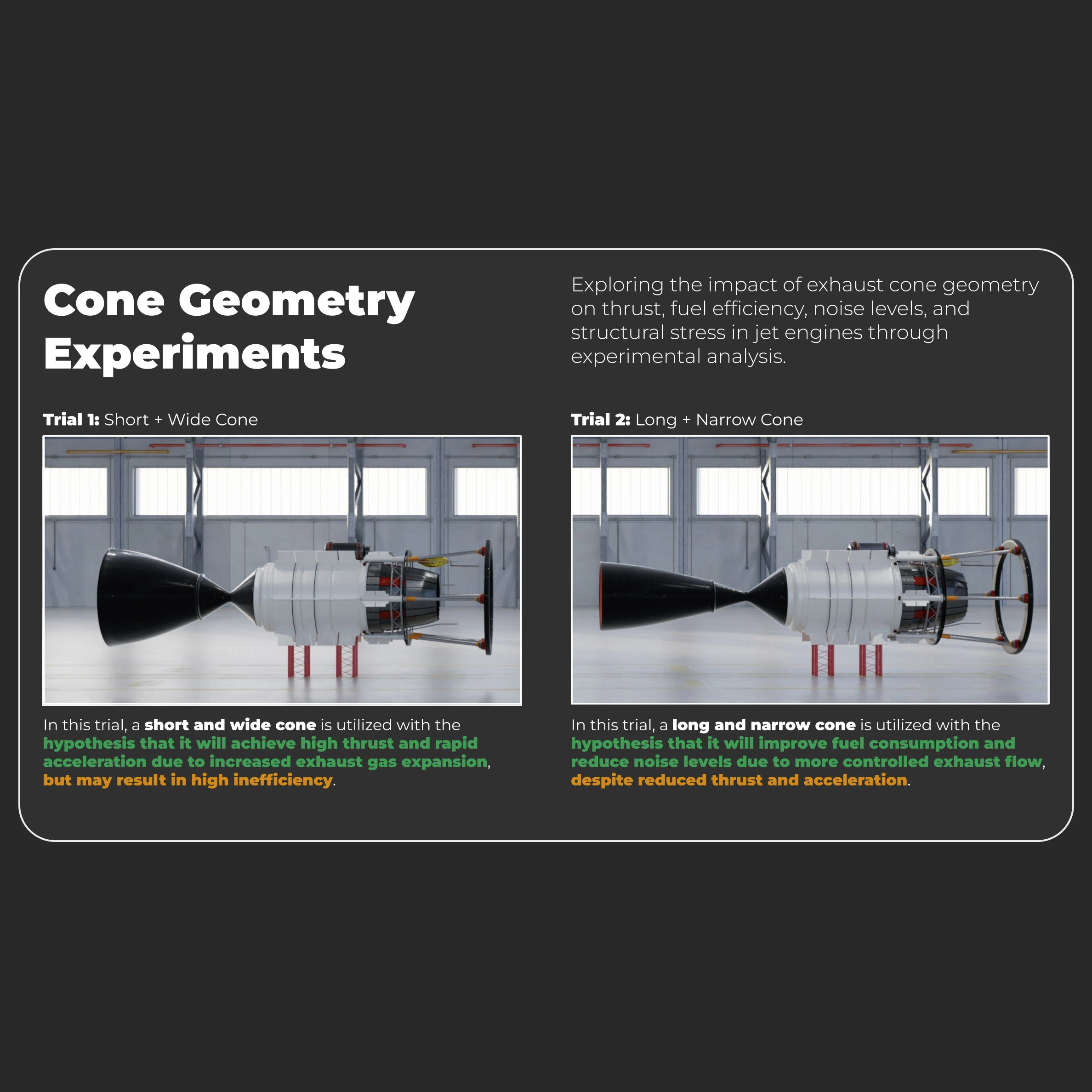

As tools gain the ability to decompose problems, generate solutions, and conduct experiments to explore novel approaches, the way users interact with them must evolve. Traditionally, design and engineering tools have operated within a command-and-control paradigm, where the user catalyzes every action and manages every detail through call-and-response interactions. By contrast, agent systems can orchestrate numerous parallel low-level work streams and experimentation processes, shifting the user’s role from primarily managing details to providing high-level direction and oversight.

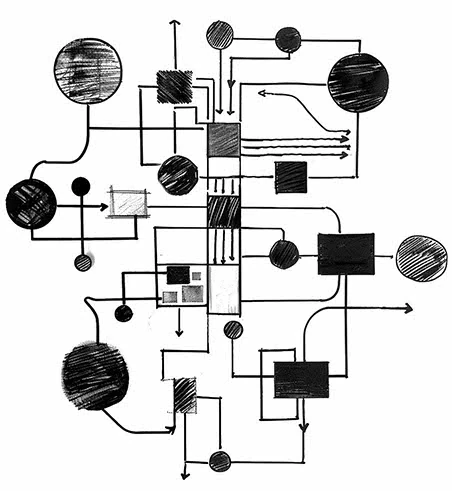

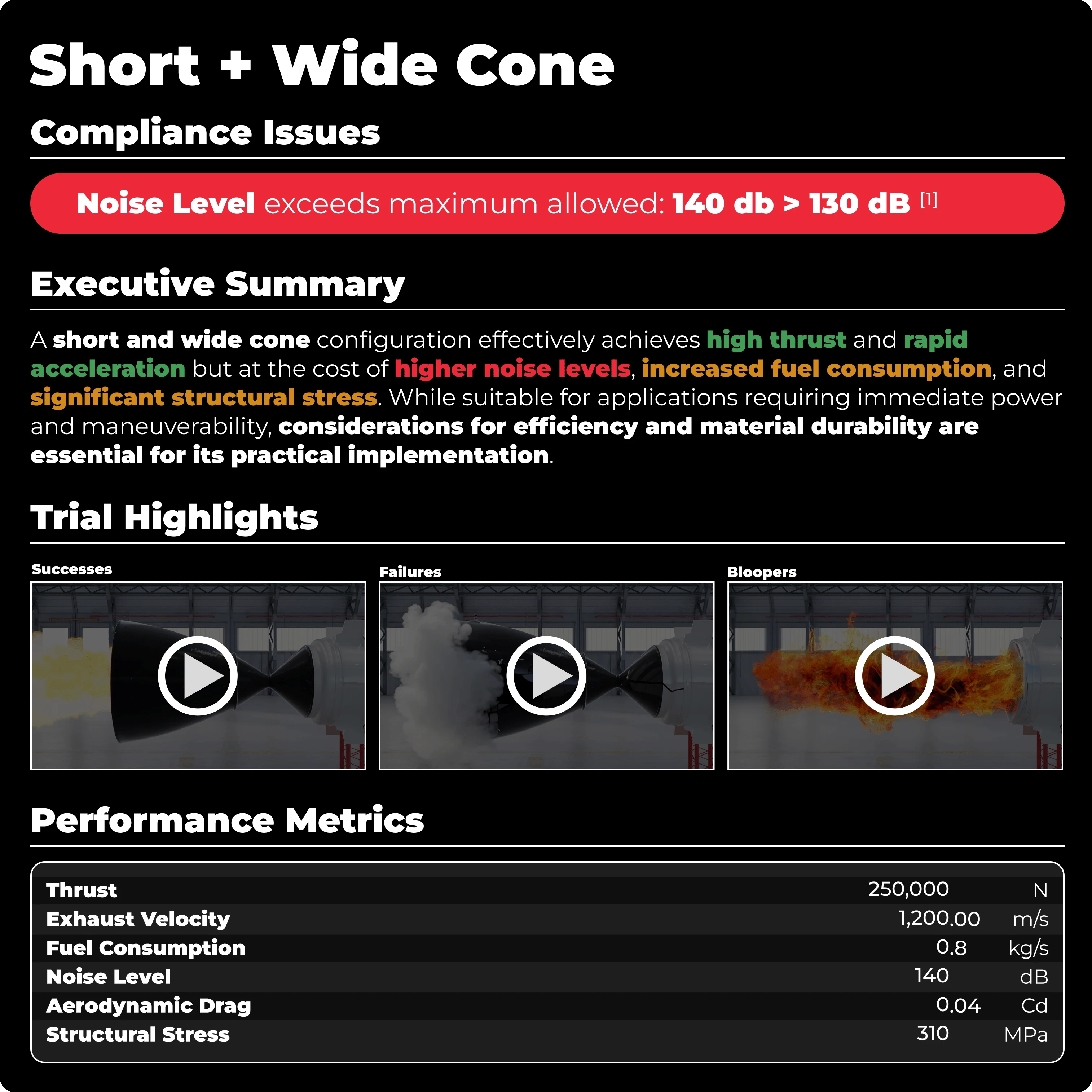

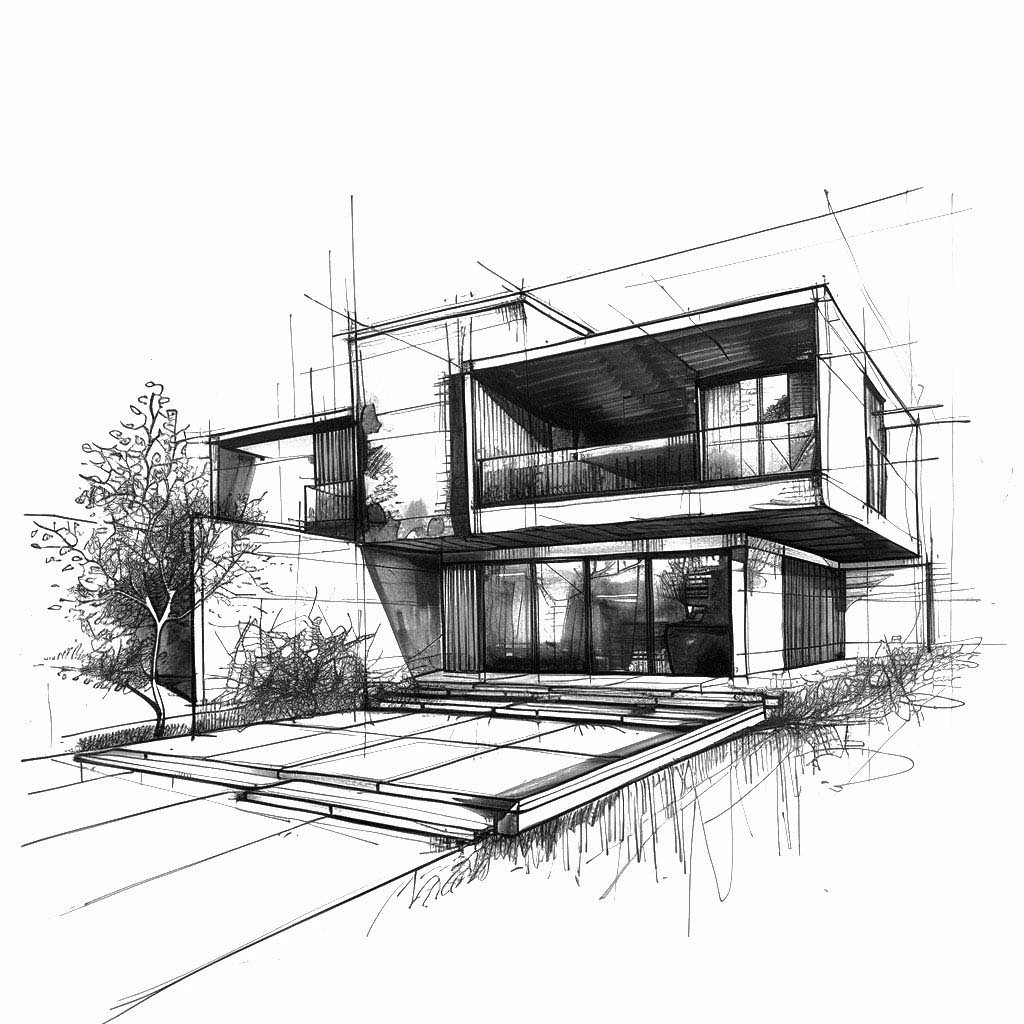

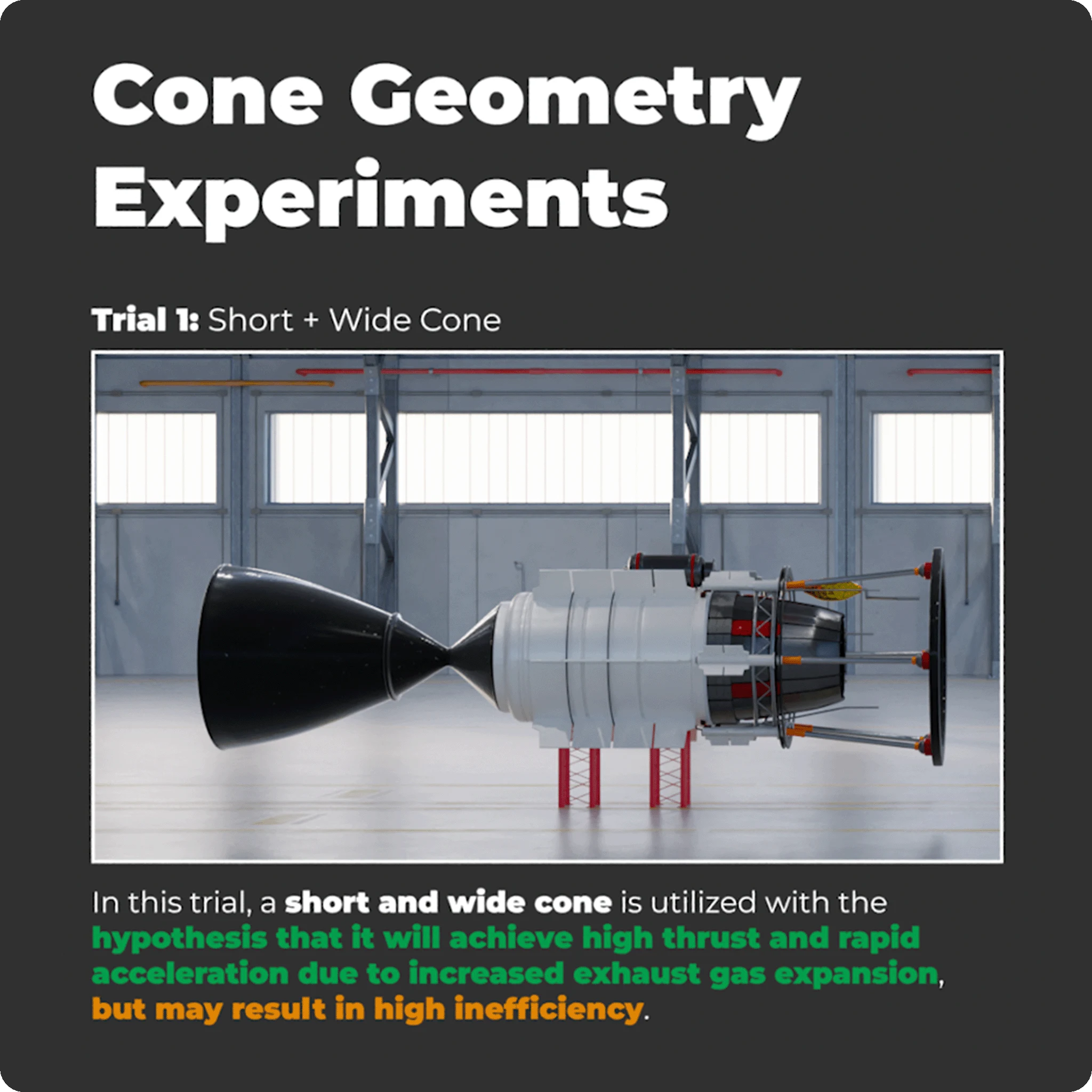

An experiment summary for a rocket cone design

To support this new mode of interaction, tools will need interfaces that enable high-level control and monitoring. Experiment Dashboards provide purpose-built interfaces for monitoring multiple experiments, displaying progress, outcomes, and key insights. In addition to passive reporting capabilities, these dashboards can serve as decision-making hubs, allowing users to actively steer efforts and refine objectives as experiments progress.

Mechanisms such as Editable Summaries allow users to refine or redirect the system’s explorations by updating a description, modifying an equation, or revising optimization criteria to produce a new instance of the experiment.
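One way to picture an editable summary is as an immutable experiment record, where every edit yields a new version instead of overwriting the old one. The sketch below assumes that design. `ExperimentSpec`, `edit_summary`, and all field names and values are hypothetical, and the nose cone example simply echoes the figure caption above.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple

@dataclass(frozen=True)
class ExperimentSpec:
    """Hypothetical record behind an editable summary."""
    description: str
    objective: str                     # expression the optimizer would minimize
    constraints: Tuple[str, ...] = ()
    version: int = 1
    parent_version: Optional[int] = None

def edit_summary(spec: ExperimentSpec, **changes) -> ExperimentSpec:
    """Return a new experiment version; the original spec is left untouched."""
    return replace(
        spec,
        version=spec.version + 1,
        parent_version=spec.version,
        **changes,
    )

v1 = ExperimentSpec(
    description="Nose cone with minimal drag",
    objective="drag_coefficient",
    constraints=("length_m <= 1.2",),
)

# The user revises the optimization criteria; this spawns version 2.
v2 = edit_summary(v1, objective="drag_coefficient + 0.1 * mass_kg")

print(v1.version, v1.objective)
print(v2.version, v2.parent_version, v2.objective)
```

Keeping earlier versions intact means a dashboard could show the lineage of an experiment and let the user return to any previous direction.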

These interfaces will enable users to collaborate with advanced, exploratory systems while maintaining control over the project’s goals, constraints, and broader sensibilities. For legacy users or those with specific adjustments in mind, traditional interfaces for manual control can still be generated on demand.

The painstaking, step-by-step processes associated with traditional design tools often cause users to get lost in implementation details rather than focusing on expressing their ideas. Consider the difference between performing a gymnastics routine and creating a keyframe animation of it – one feels natural and expressive, the other tedious and mechanical.

Creative flow can be glorious, but it is also quite fragile. Users lose it when forced to adapt their thinking to a tool that feels unnatural or unfamiliar. They lose momentum when tools demand constant focus on low-level details. AI-enabled tools offer an alternative, allowing users to work at a higher, conceptual level while AI agents handle the details behind the scenes.

AI enables creative tools to adapt dynamically, delivering just-in-time functionality and interfaces that are always perfectly suited to the task at hand and the user’s approach.

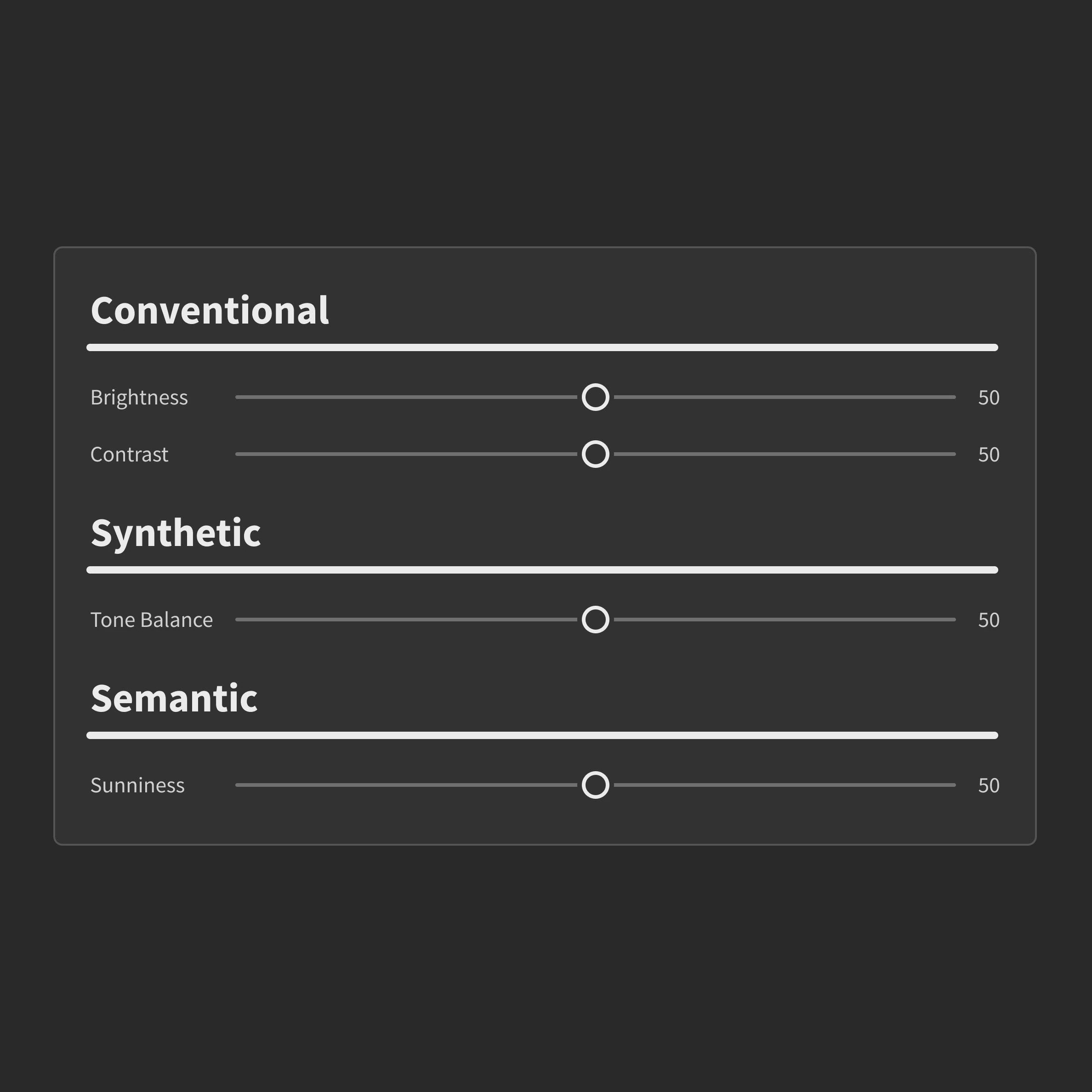

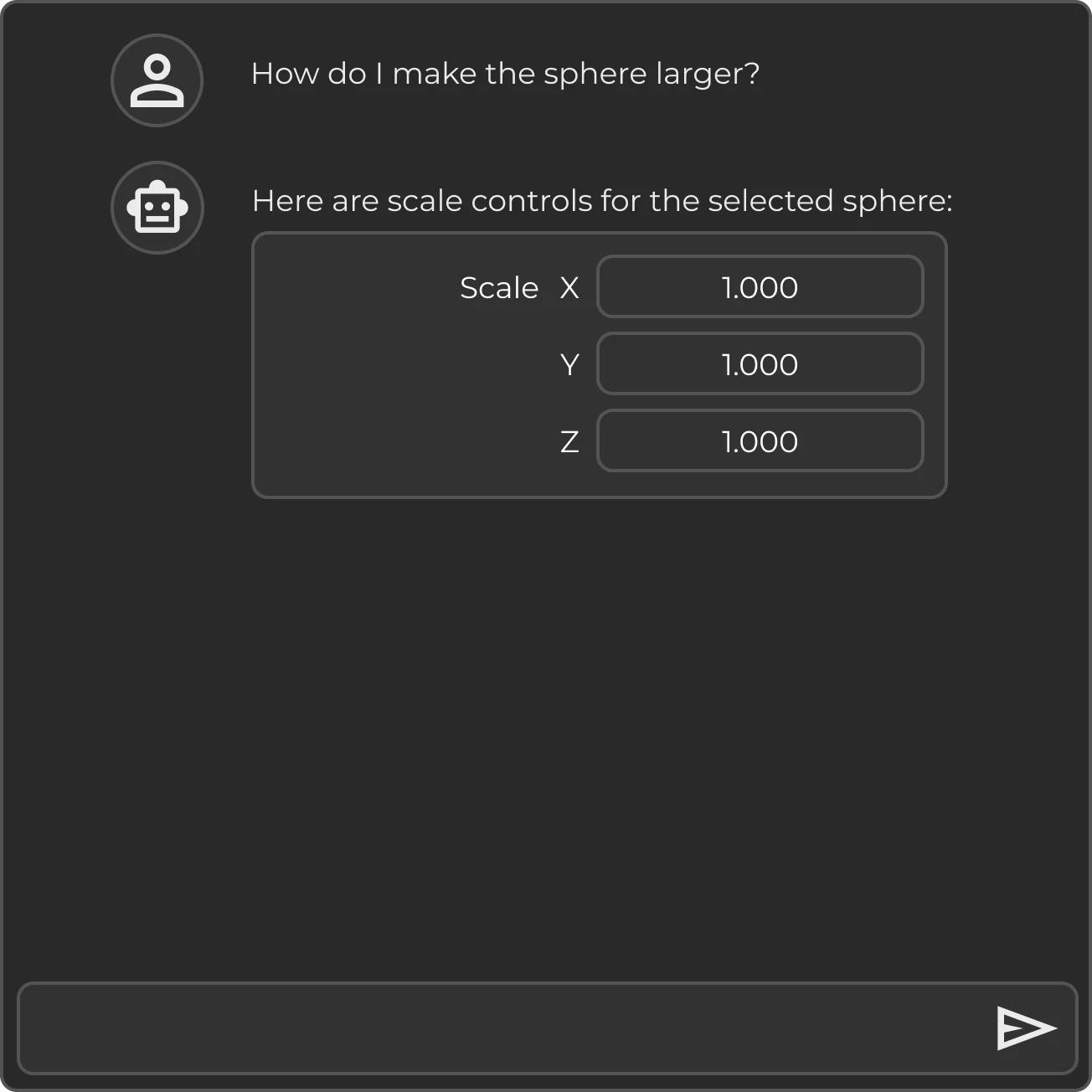

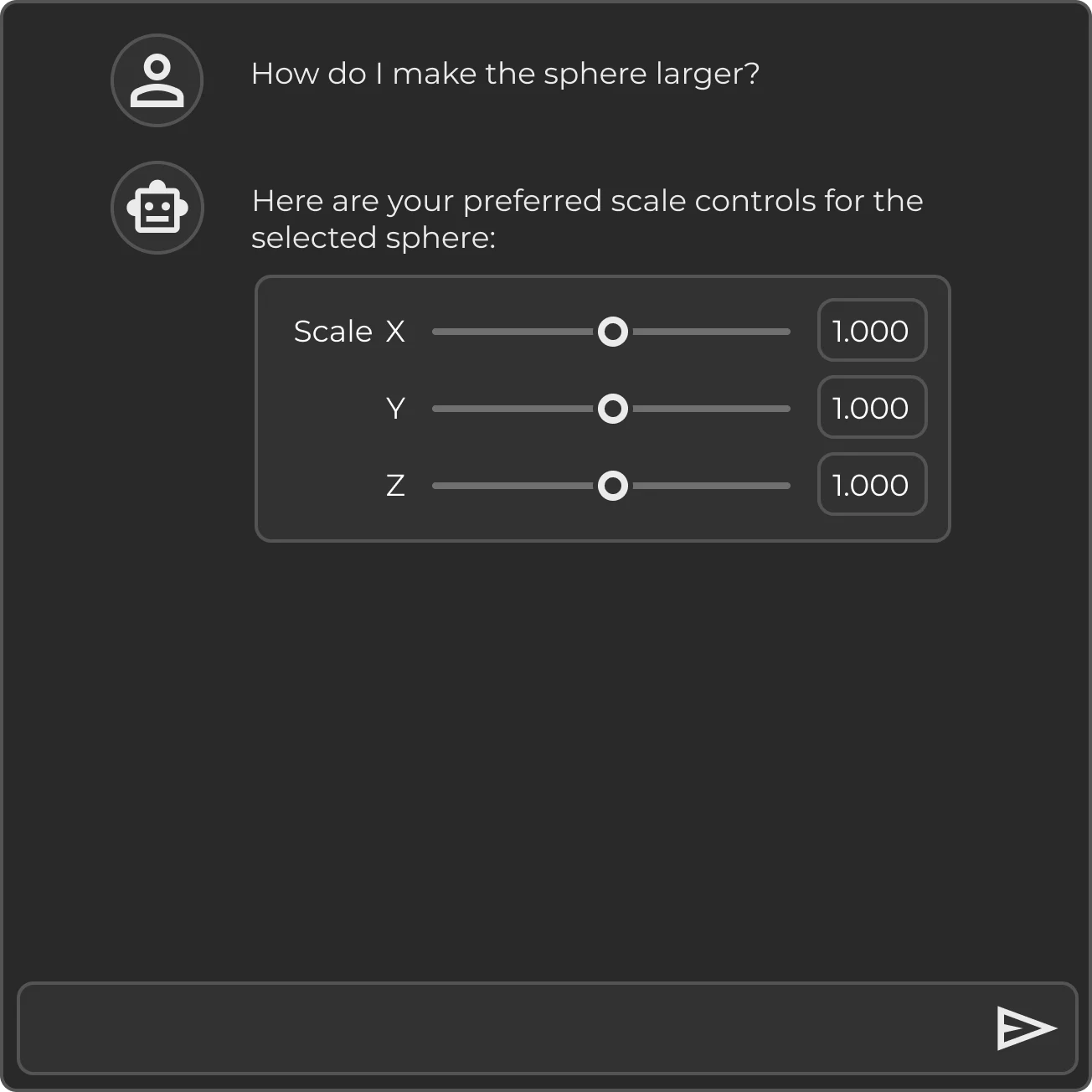

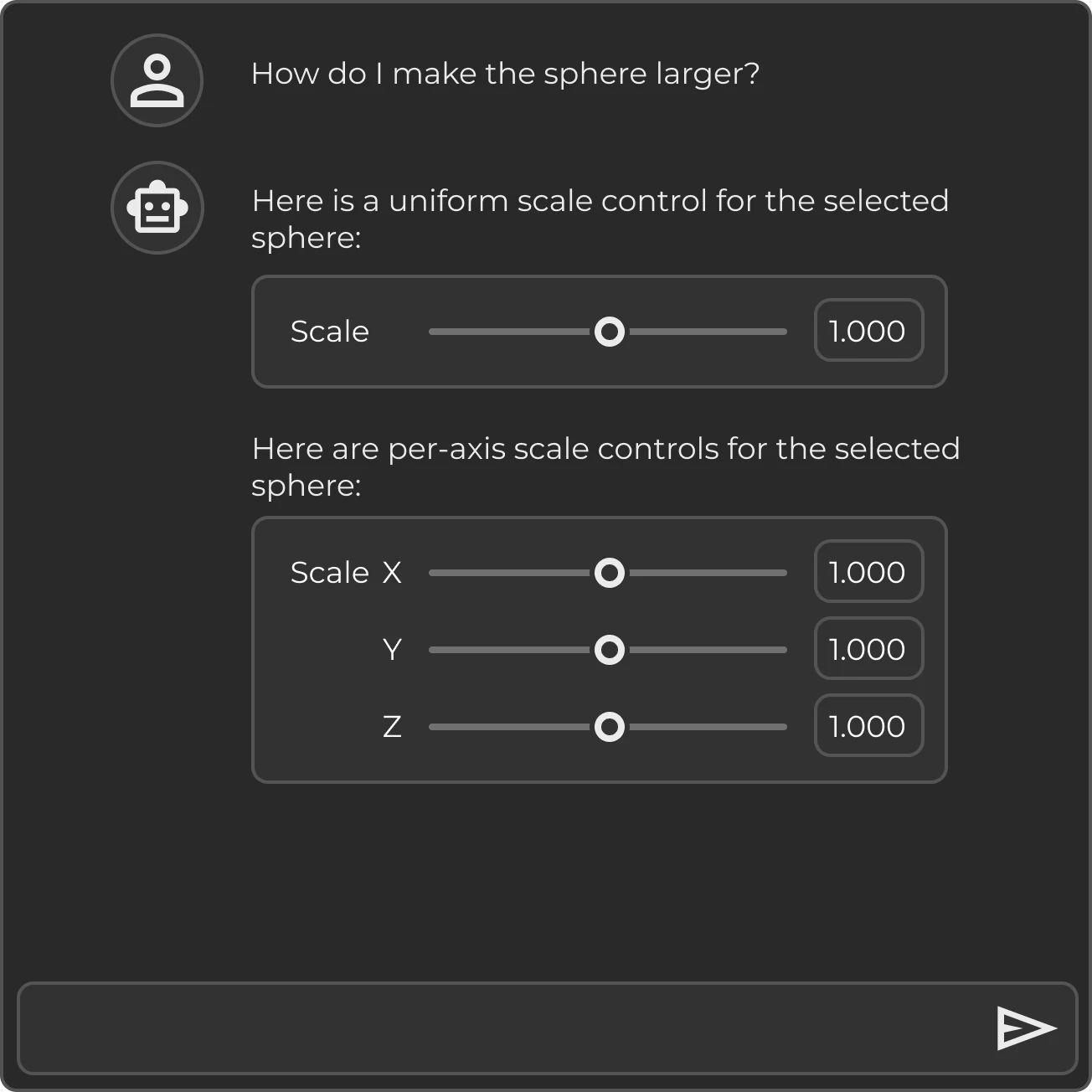

Property Panel

A JIT collection of familiar controls gathered outside of the canvas for easy access and manipulation of conventional, synthetic or semantic attributes.

Direct Manipulation

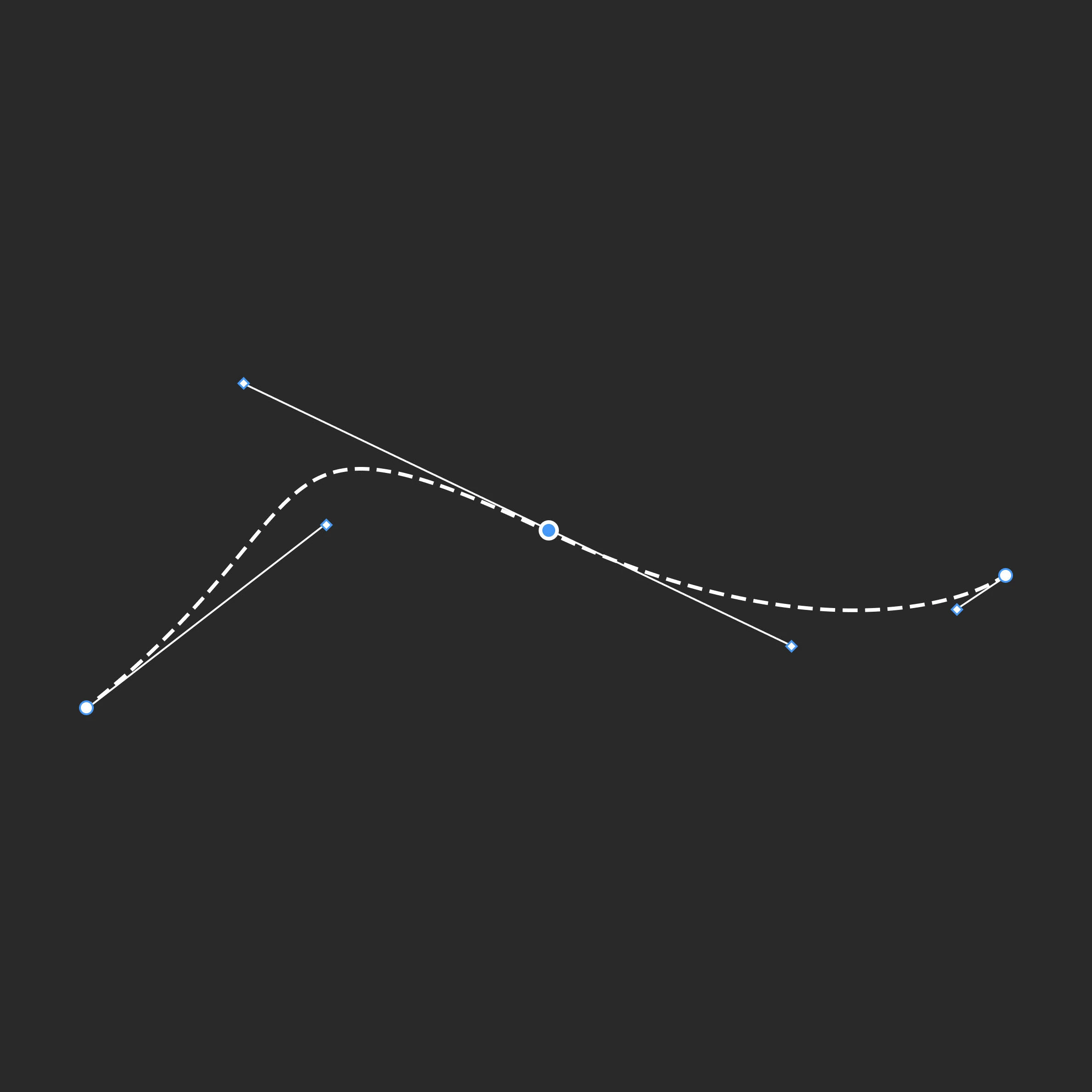

Interactive in-canvas tools, like Bézier curve editors and 3D transform gizmos, that allow precise adjustment of geometric attributes directly on the design elements.

Semantic Manipulation

In-canvas tools that intuitively adjust content-specific attributes for precise and relevant modifications directly on the design elements.

Design-by-Description

Text input tools that allow users to generate design elements by describing the desired output, enabling intuitive creation with natural language.

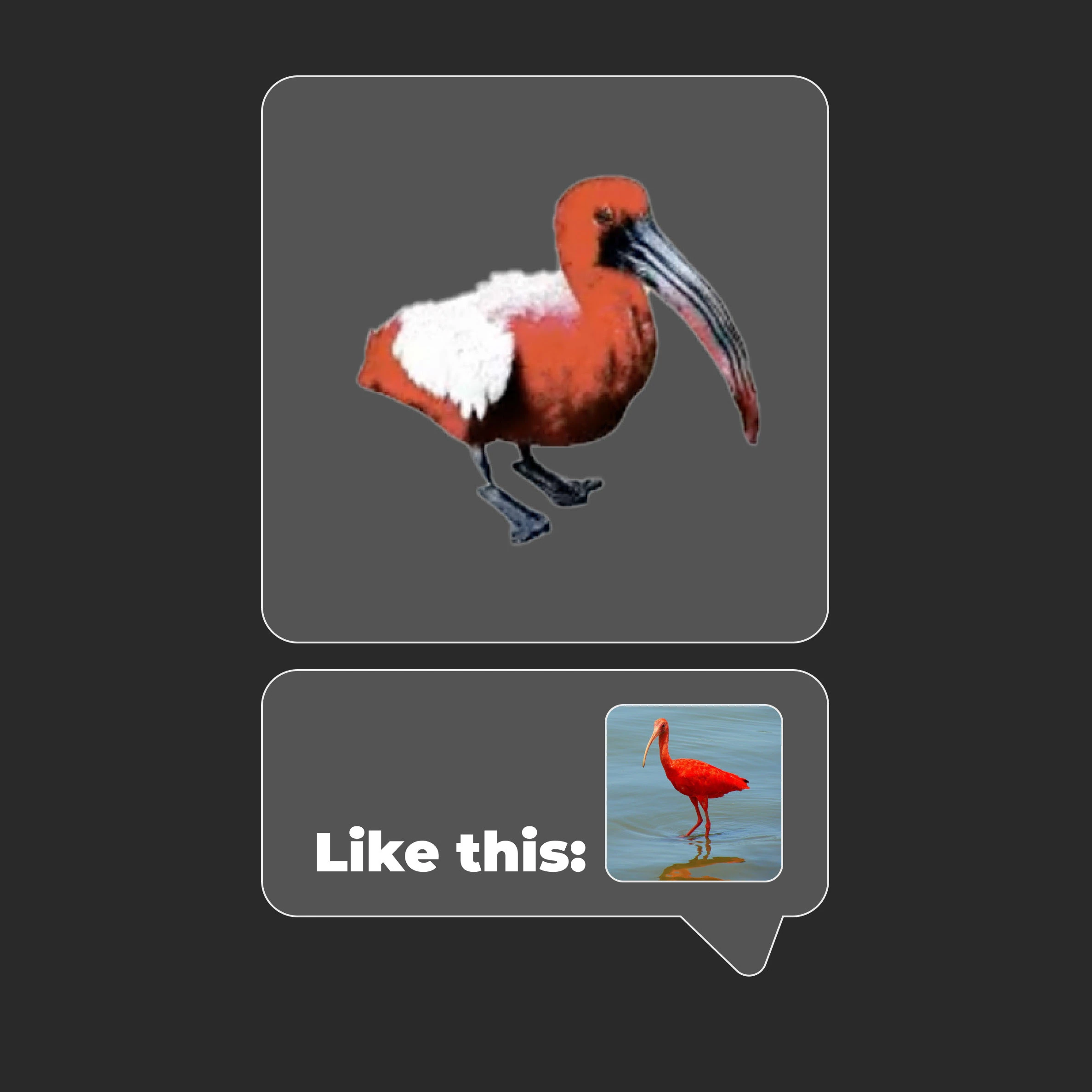

Design-by-Reference

Tools that enable users to generate design elements by providing reference media, facilitating intuitive creation based on visual examples.

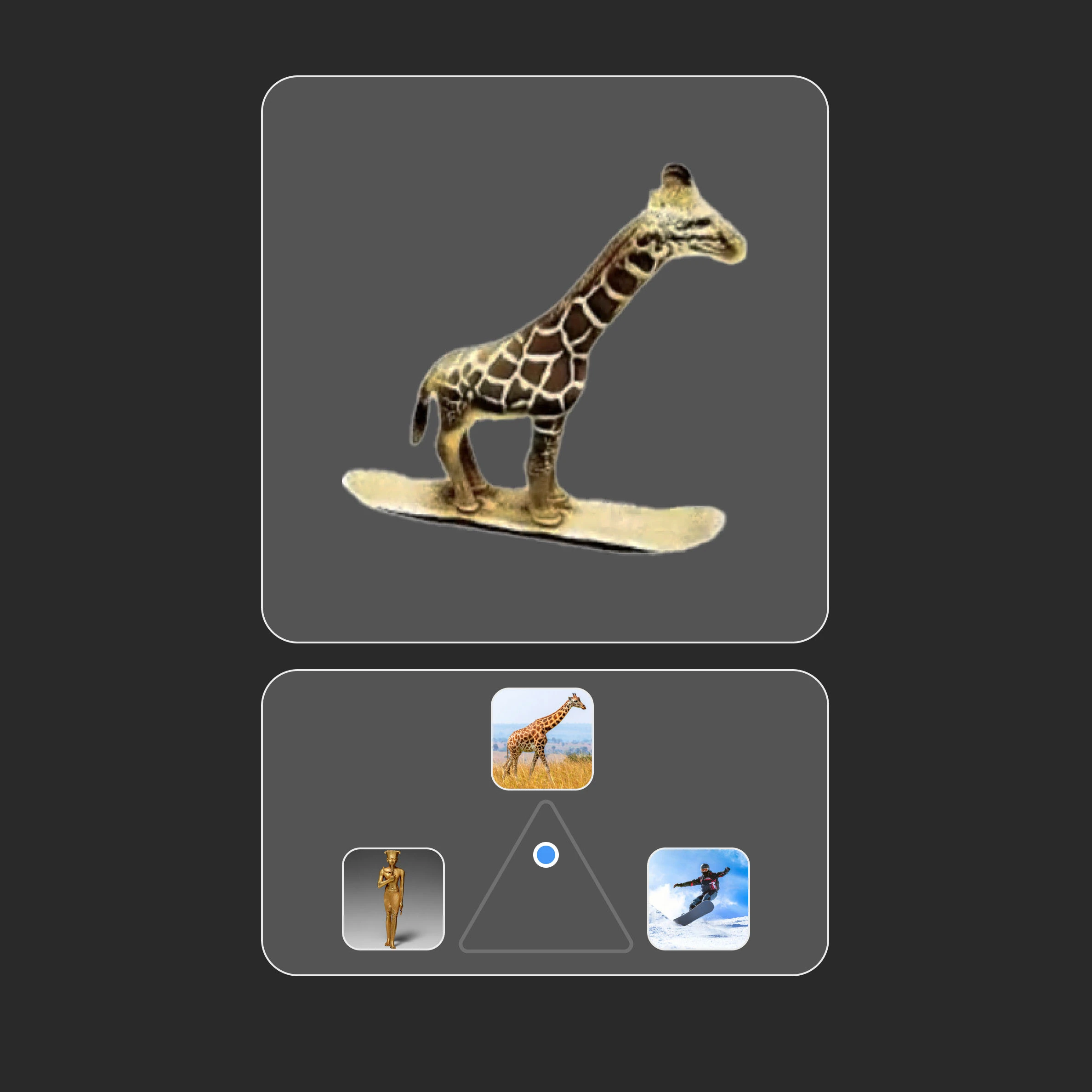

Design-by-Inspiration

Tools that allow users to generate design elements by providing multiple reference media, with the ability to weight their relative influence on the output.

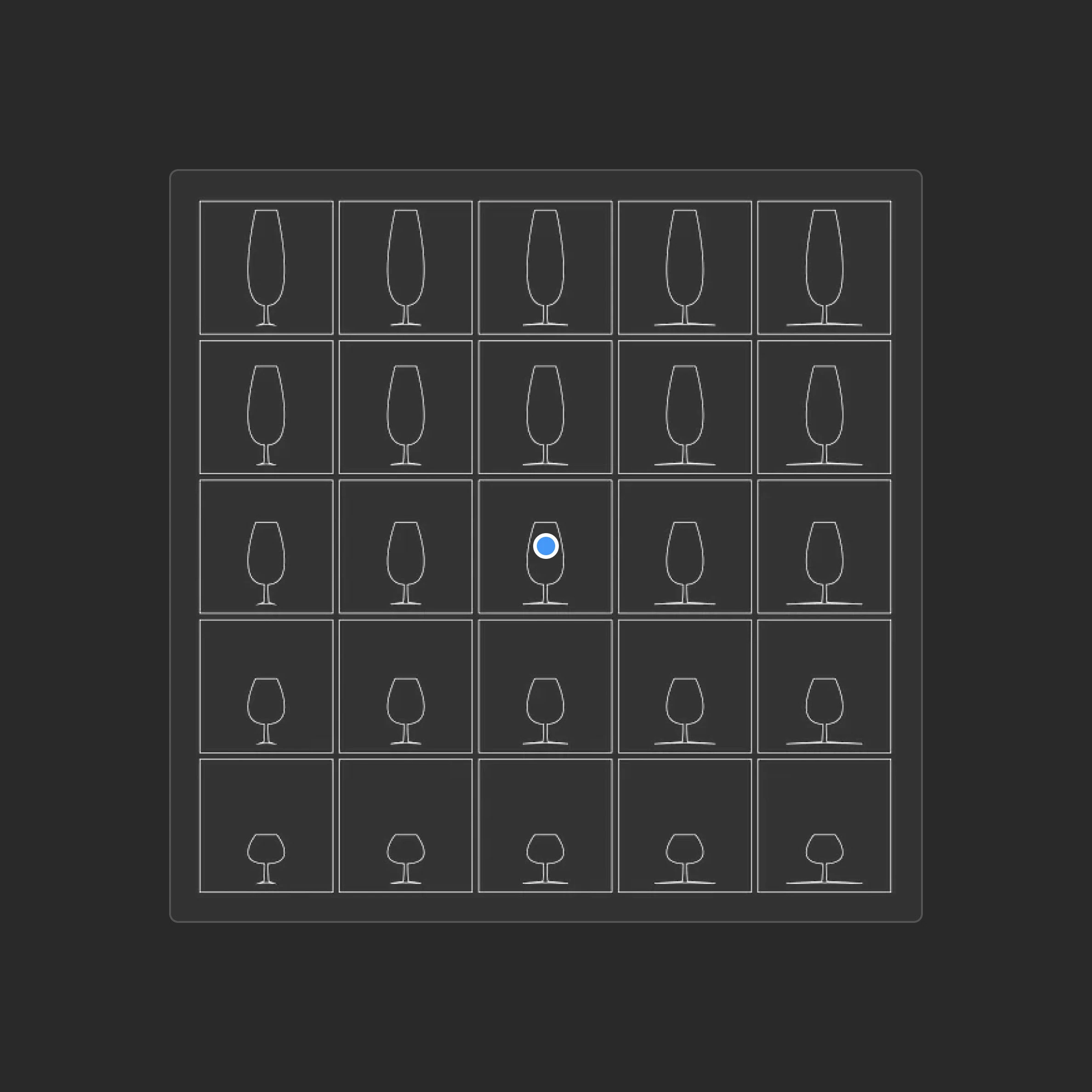

Latent Navigation

Tools that use dimensionality reduction to organize a variation map, allowing users to visually and intuitively select the optimal combination of attributes.

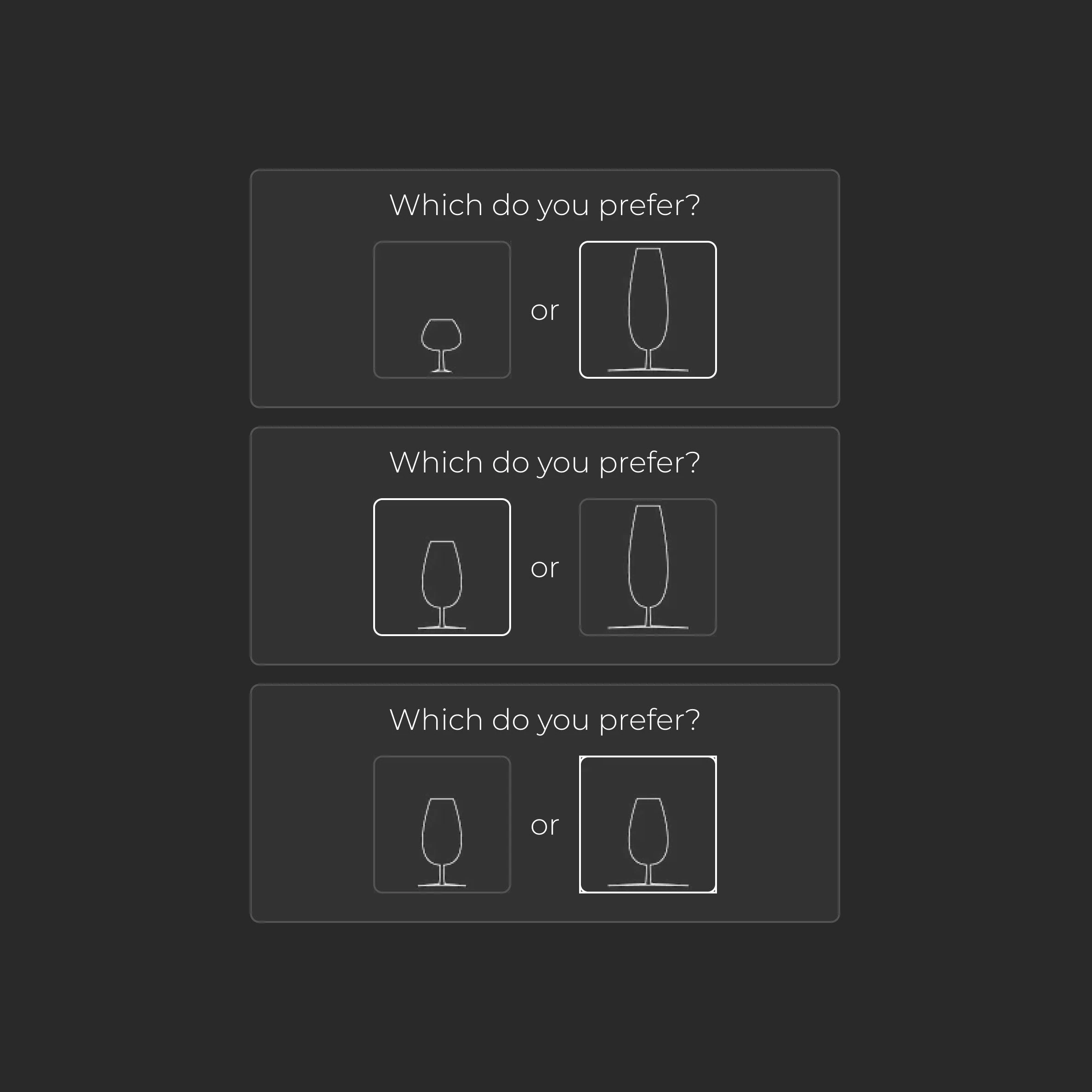

Domain Narrowing

Tools that use tactics like pairwise comparisons or 20 Questions to guide users in selecting preferences. The algorithm homes in on the desired attribute combination through successive iterations.

Constraint Solving

Tools that let users set goals and constraints for high-level attributes and automatically optimize low-level parameters to achieve the desired balance.

Experiment Workspace

A high-level dashboard offering oversight of multiple experiments, enabling users to monitor progress, direct research efforts, and make strategic adjustments.

Editable Summary

Generated summaries act as editable overviews; each adjustment launches a new experiment version, giving users high-level, conceptual control over its direction.

User Directive

Just-in-time requests tailored to specific questions or decision points, guiding users to clarify goals and keep agent actions aligned with objectives.
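To make one entry from the list above concrete, here is a toy sketch of Constraint Solving. The user states a high-level goal (a box that holds at least a given volume) and a constraint (a height limit), and the tool searches the low-level dimensions for the option that uses the least material. The `solve_box` function, the box scenario, and the brute-force grid search are assumptions chosen for brevity. A real tool would more plausibly rely on a dedicated optimizer.

```python
from itertools import product

def solve_box(min_volume, max_height, sizes=range(1, 31)):
    """Find integer (width, depth, height) in cm with the smallest surface area
    that still satisfies the volume goal and the height constraint."""
    best = None
    for w, d, h in product(sizes, repeat=3):
        # Skip candidates that violate the user's high-level requirements.
        if h > max_height or w * d * h < min_volume:
            continue
        area = 2 * (w * d + w * h + d * h)   # material used, in cm^2
        if best is None or area < best[0]:
            best = (area, (w, d, h))
    return best

# Goal: hold at least 1000 cm^3. With a generous height limit, the cube wins.
area, dims = solve_box(min_volume=1000, max_height=30)
print(area, dims)  # 600 (10, 10, 10)

# Tightening one high-level constraint reshapes the low-level parameters.
flat_area, flat_dims = solve_box(min_volume=1000, max_height=5)
print(flat_area, flat_dims)
```

The user only ever touches the two high-level settings, while width, depth, and height are chosen automatically.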

In the early days of generative AI adoption, it is clear that while LLMs can, to some extent, level the playing field for implementation-related skills, other forms of creativity, expertise, and resourcefulness remain key differentiators for the human operator.

Some users approach a new tool with an intrepid spirit, finding clever ways to adapt its capabilities to their specific needs, while others stick to the suggested use cases and go no further. LLMs should, in theory, make complex tasks equally accessible to all, but their reactive nature means that their usefulness depends on what the user thinks to ask of them.

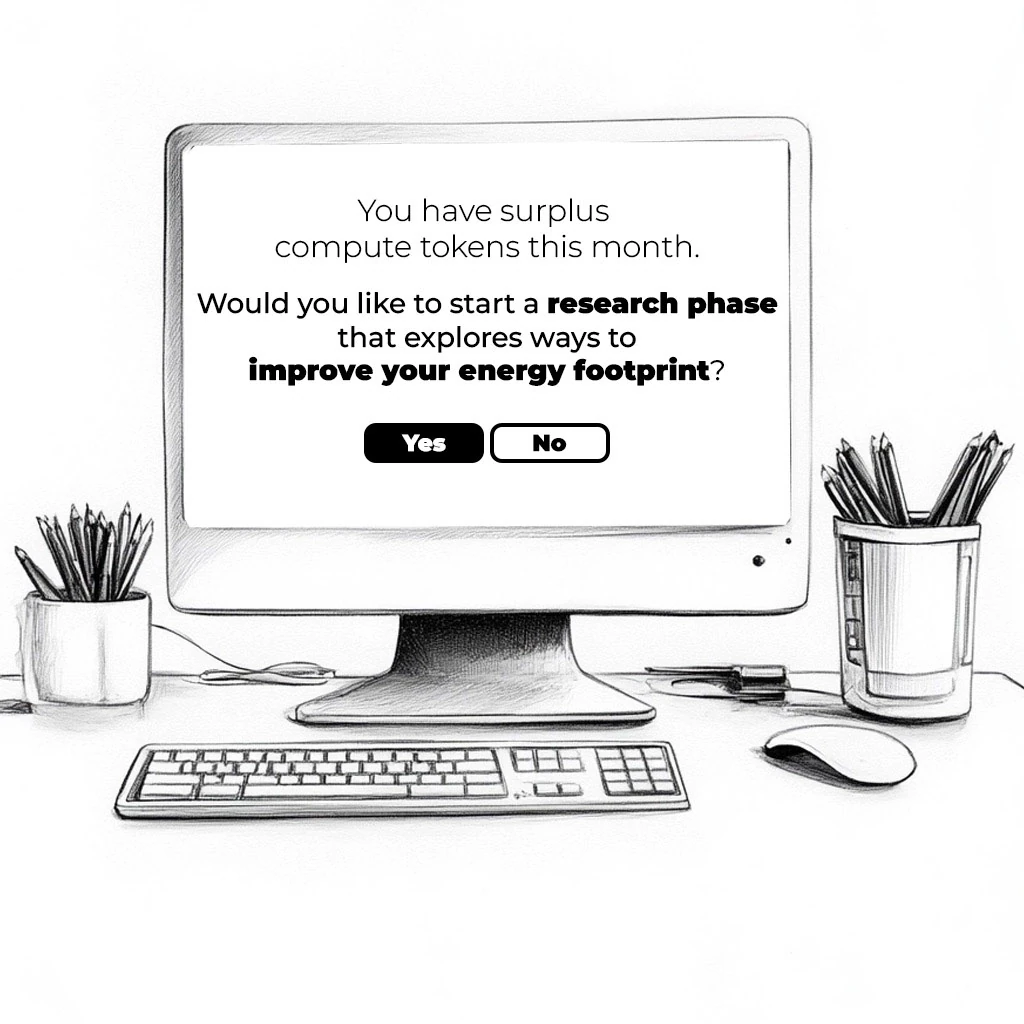

One of the most impactful new ways creative tools can support users through AI is to play a more proactive role in anticipating needs, identifying unconsidered opportunities, and uncovering challenges that might otherwise go unnoticed.

Design and engineering problems can be very complex, especially when considered from materials and supply chains all the way to manufacturing and distribution. Optimizing across such a large number of interconnected considerations can be challenging for a human.

A few user directives

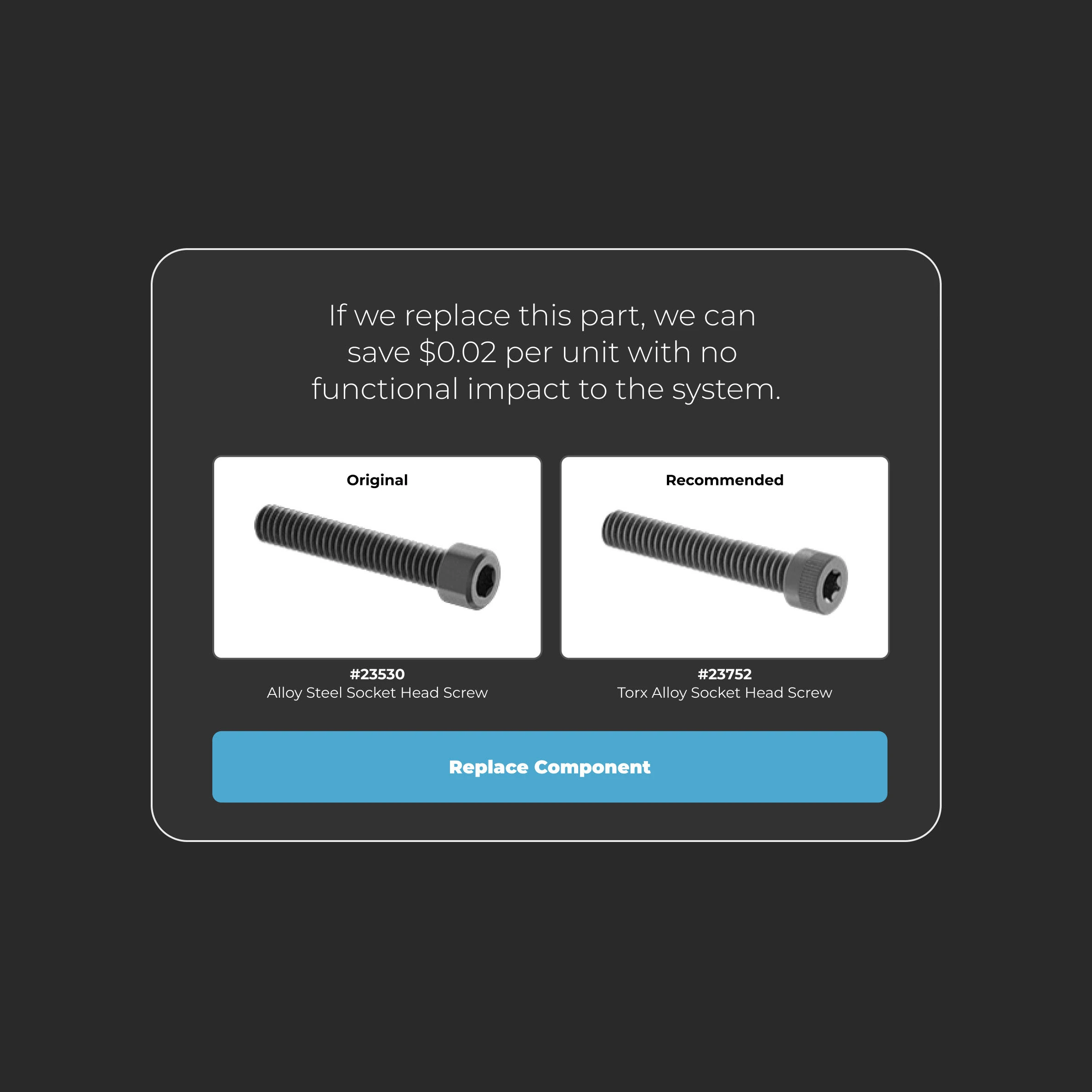

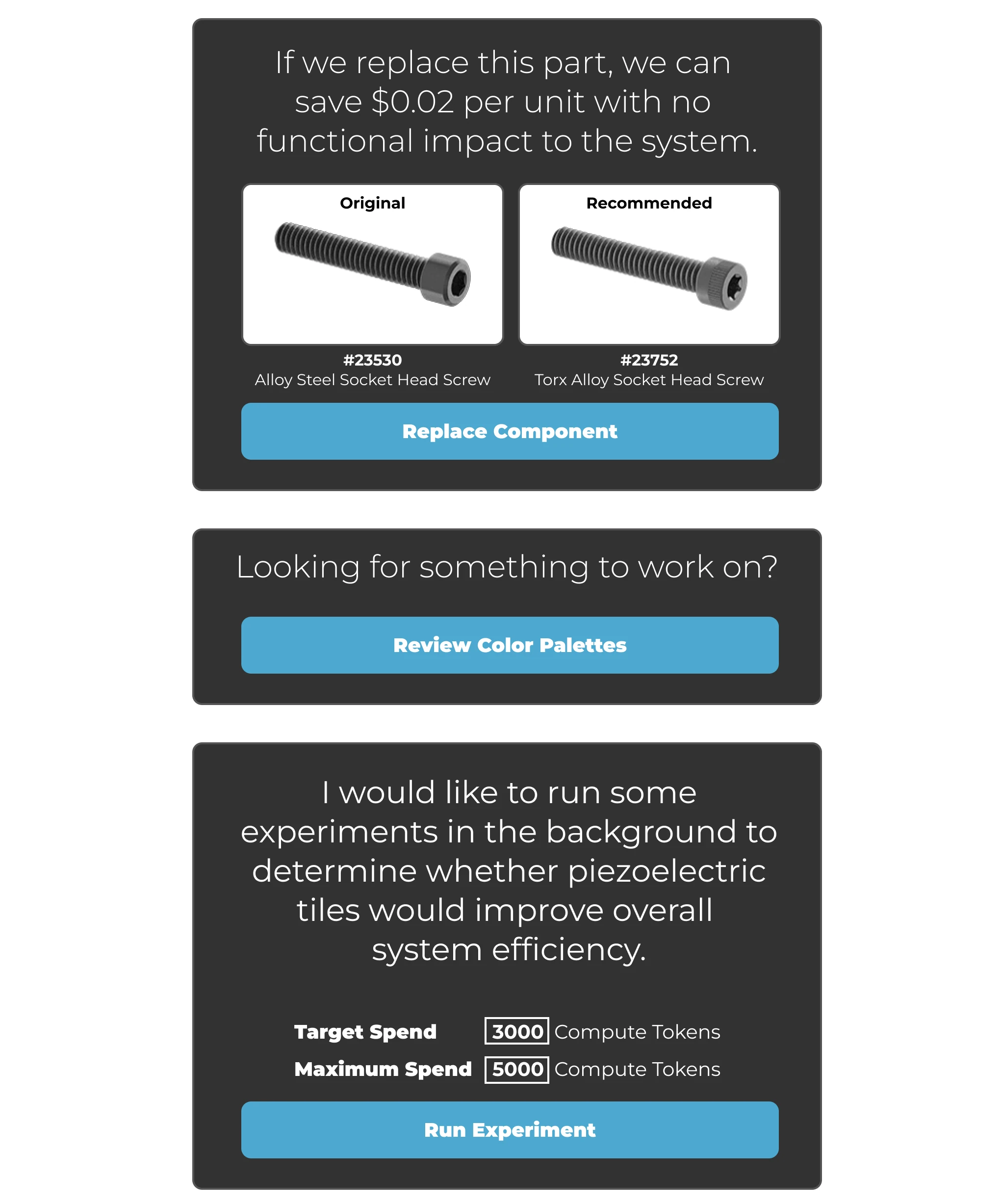

AI-driven tools can proactively support users in addressing this complexity by identifying ways to optimize a design for factors like cost, environmental impact, or performance. They could suggest ways to refine design attributes to better align with the user’s goals and constraints, such as adjusting a CAD model to reduce material waste or swapping out components for more efficient or cost-effective alternatives. These mechanisms can also help users see their work from new perspectives, such as by suggesting additional use cases or go-to-market strategies for a product.

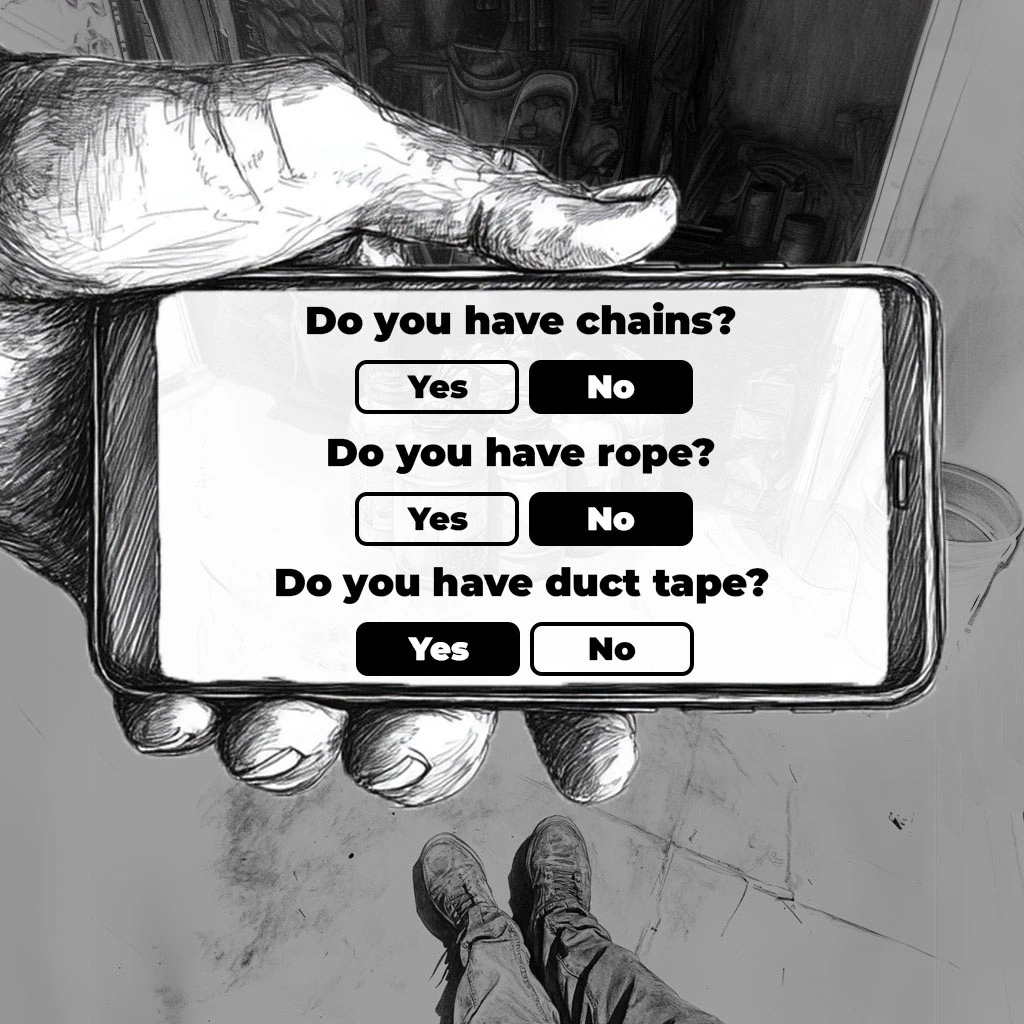

Some early agentic coding platforms attempt to work autonomously after receiving the user’s initial prompt, but this approach often falls short, as ambiguous decisions that arise mid-process may require user clarification. Through incremental engagement, the tool can address considerations the user may not have specified upfront and ensure continued alignment with his or her goals.

As thoughtful collaborators, agents should raise questions, suggest activities when users are passive, and propose background studies as new opportunities arise. The cadence of these interactions doesn’t need to follow a rigid call-and-response pattern. Agents could instead autonomously develop a hypothesis, run experiments to test the idea, and then present a new strategy or a drop-in replacement for an existing component to the user.

Above all, agentic systems in design and engineering software should serve as intellectual foils, helping users stretch their thinking and explore alternative perspectives.

In the introduction to his seminal book Mindstorms, Seymour Papert makes a potent observation:

As millions of "Star Trek" fans know, the starship Enterprise has a computer that gives rapid and accurate answers to complex questions posed to it. But no attempt is made in "Star Trek" to suggest that the human characters aboard think in ways very different from the manner in which people in the twentieth century think. Contact with the computer has not, as far as we are allowed to see in these episodes, changed how these people think about themselves or how they approach problems. (Papert 4)

Papert’s insight underscores what should be a critical goal for AI in creative tools: to go beyond merely solving problems for users and instead help transform how they think about the problems and possibilities at hand. By serving as proactive collaborators, these tools can help elevate human creativity to new heights.

Design and engineering projects often span numerous disciplines, with work fragmented across software, teams, and roles. Important contextual information is often lost as components are passed between tools and collaborators. AI can bridge these gaps to unify workflows, foster collaboration, and uncover opportunities for optimization and cohesion.

In this essay, I have frequently referred to “the user” in much the same manner as would be appropriate for the discussion of conventional digital creation tools. As AI reshapes the architecture of creative tools and how we interact with them, the concept of the user will need to evolve beyond its traditional definition.

Within the interaction paradigm proposed in this essay, individual users can still work in a conventional command-and-control manner if they choose – decomposing problems themselves and executing granular commands to achieve their goals. Alternatively, the user can articulate his or her high-level intent and leave it to agents to decompose the problem and execute the details under the hood.

In addition to supporting individual users, AI-driven tools enable new forms of human collaboration. In legacy software, so-called 'collaboration tools' often amount to little more than shared workspaces, where users zip around the design making haphazard changes and inadvertently undermining one another.

One powerful way that AI can unlock new forms of human collaboration is by acting as an "adjudicator" that gathers input from multiple stakeholders independently and synthesizes their perspectives into cohesive solutions that balance competing priorities and concerns. For example, when designing a new office building, the system could integrate incoming employee preferences for workspace layouts and amenities into a unified plan that serves the collective needs of the group. Users in this setting could, of course, utilize the same set of personalized just-in-time interfaces as would be available to individual users.
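As a deliberately simple stand-in for the adjudicator idea, the sketch below combines independently gathered rankings using a Borda count, a classic voting rule. This is an assumption made for illustration, not a method proposed in this essay, and an AI adjudicator would presumably weigh far richer input than ranked lists. The layout names and employee rankings are invented.

```python
from collections import defaultdict

def borda(rankings):
    """Combine ranked preferences: each option earns more points the higher
    a stakeholder placed it. Ties are broken alphabetically."""
    scores = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, option in enumerate(ranking):
            scores[option] += n - 1 - position
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))

# Hypothetical input: four employees each rank three workspace layouts,
# without seeing one another's answers.
employee_rankings = [
    ["open plan", "quiet pods", "private offices"],
    ["quiet pods", "private offices", "open plan"],
    ["quiet pods", "open plan", "private offices"],
    ["private offices", "quiet pods", "open plan"],
]

result = borda(employee_rankings)
print(result)
# [('quiet pods', 6), ('open plan', 3), ('private offices', 3)]
```

Here "quiet pods" wins even though only two of the four employees ranked it first, because nobody ranked it last. A consensus option of this kind is the sort of outcome an adjudicating system would look for.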

The concept of a “user” extends further still, encompassing even passive contributors. For instance, employees working in an existing office environment generate data through their everyday actions – how they move through space, where they congregate, and which areas are consistently underused. Without making any explicit requests, their behavior can inform the system’s reasoning.

In these ways, AI broadens the notion of a “user,” inviting participation from individuals, groups, and even those who never explicitly interact with the tool. By drawing on both active input and observed behavior, these systems can better serve the diverse needs and goals of everyone affected by design and engineering efforts.

Though computers have always lived under desks and in windowless warehouses, they are now coming into the light. In the AI age, computers can interact with the world beyond their physical confinement, gathering data from diverse and distributed sources like APIs, sensors, and social media while taking action through generative systems that enable coordination, communication, and the creation of media assets. Dario Amodei provides an excellent framework for thinking about how to leverage these decentralized capabilities.

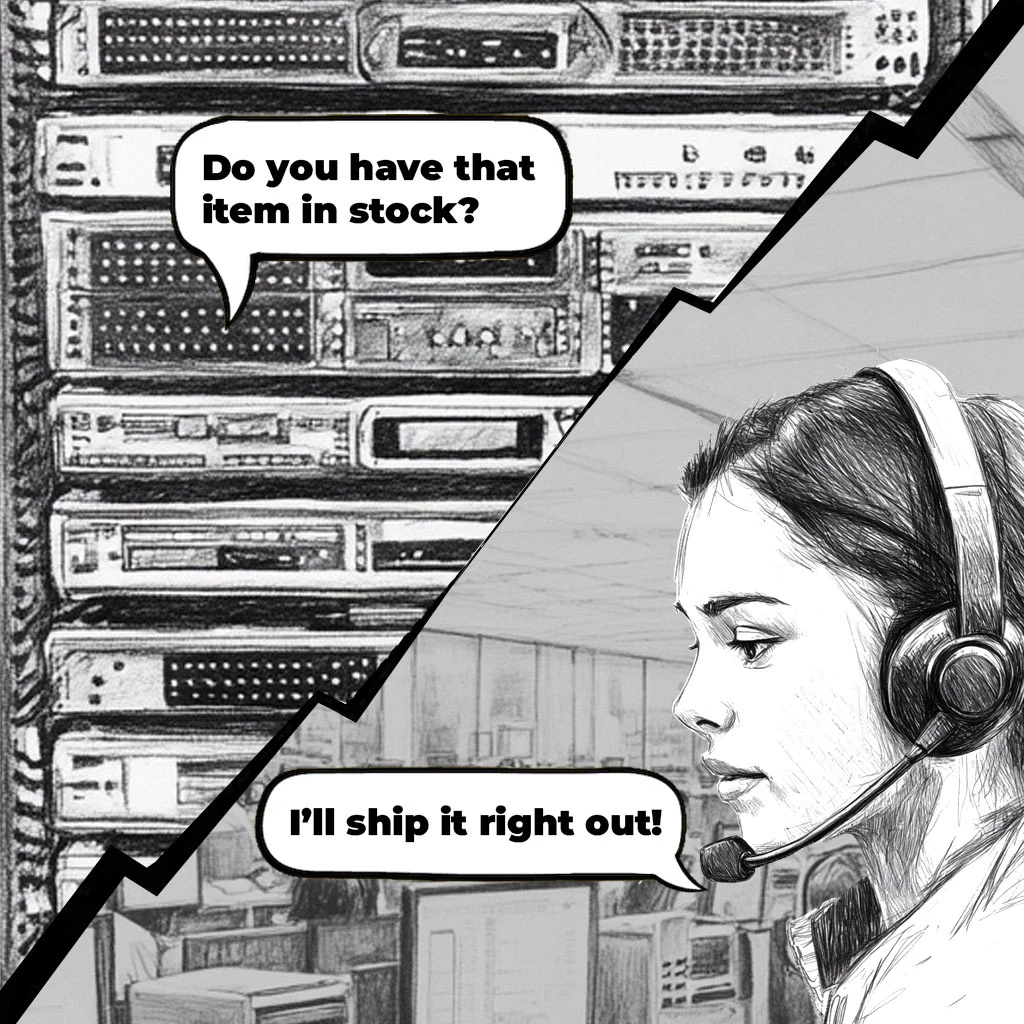

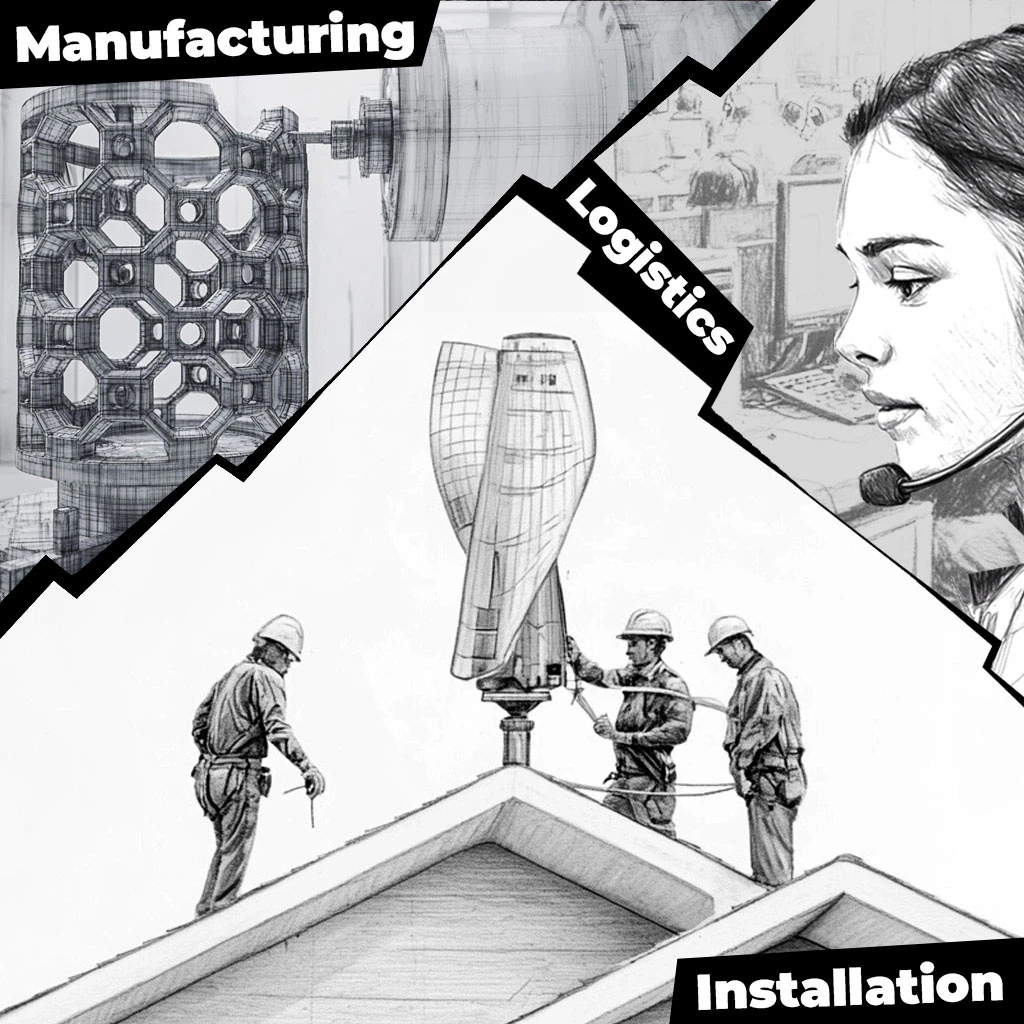

Systems of this kind can be used to bridge the gap between digital tools and real-world outcomes – without the need for humanoid robots. Agents can draft work orders, create CAD files, coordinate designs with manufacturers, generate production schedules, and communicate with vendors. By sensing and acting in the physical world through decentralized capabilities, these systems will be able to coordinate production, manage supply chains, and transform designs into manufactured products.

Let’s take a closer look at some of the ways AI can contribute to these processes by acting as a dynamic collaborator across domains to solve real-world problems:

Ideation

Help users quickly visualize rough concepts, enabling them to explore ideas before committing to detailed development and realization.

Data Gathering

Integrate data from sensors and web sources, delivering it to the right contexts to inform users and agent systems.

Responsive Workstreams

Offer contextually appropriate tasks and generate corresponding UIs so that users can stay engaged wherever they go.

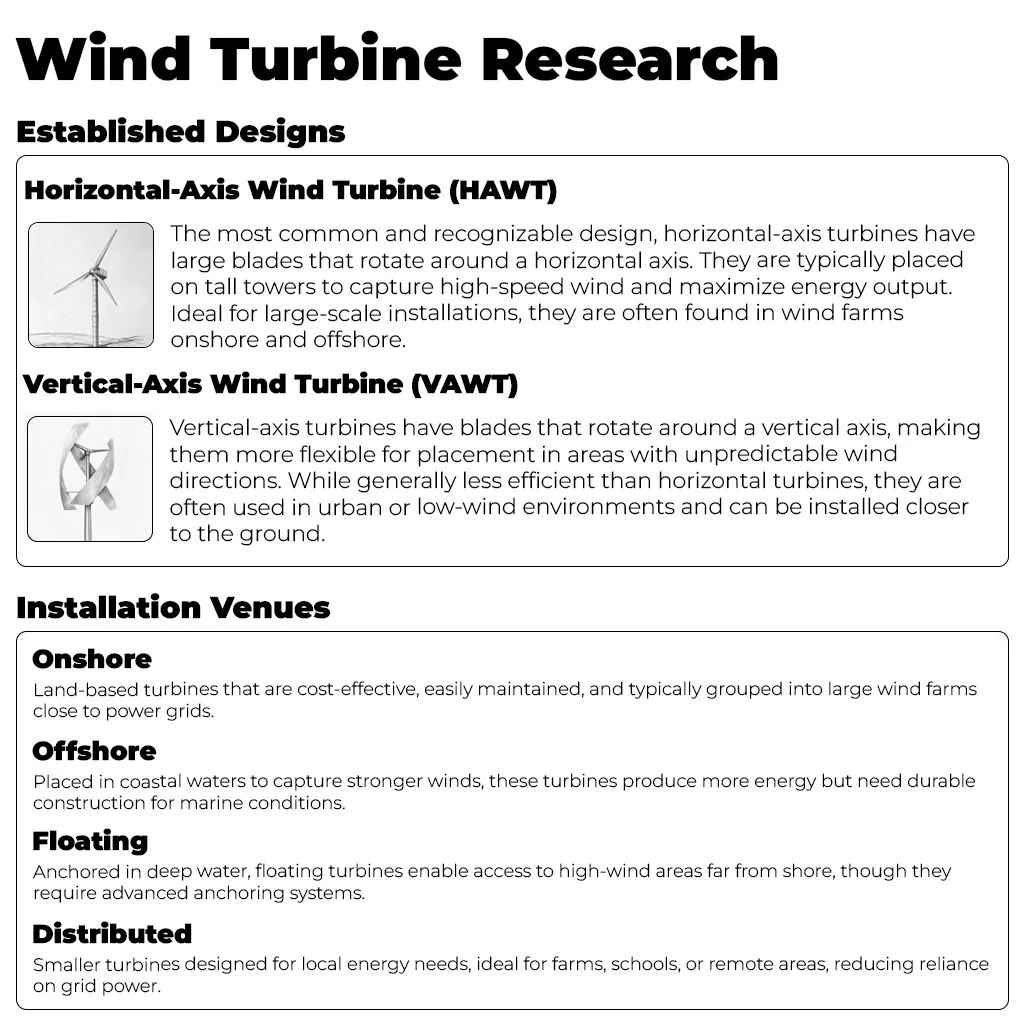

Domain Research

Conduct agentic research on existing solutions, best practices, and underlying principles to ensure users benefit from proven methods and existing knowledge.

Domain Education

Provide just-in-time educational materials and interactive courses. Inform decision-making by helping users understand how component decisions impact overall goals.

Responsive Design ++

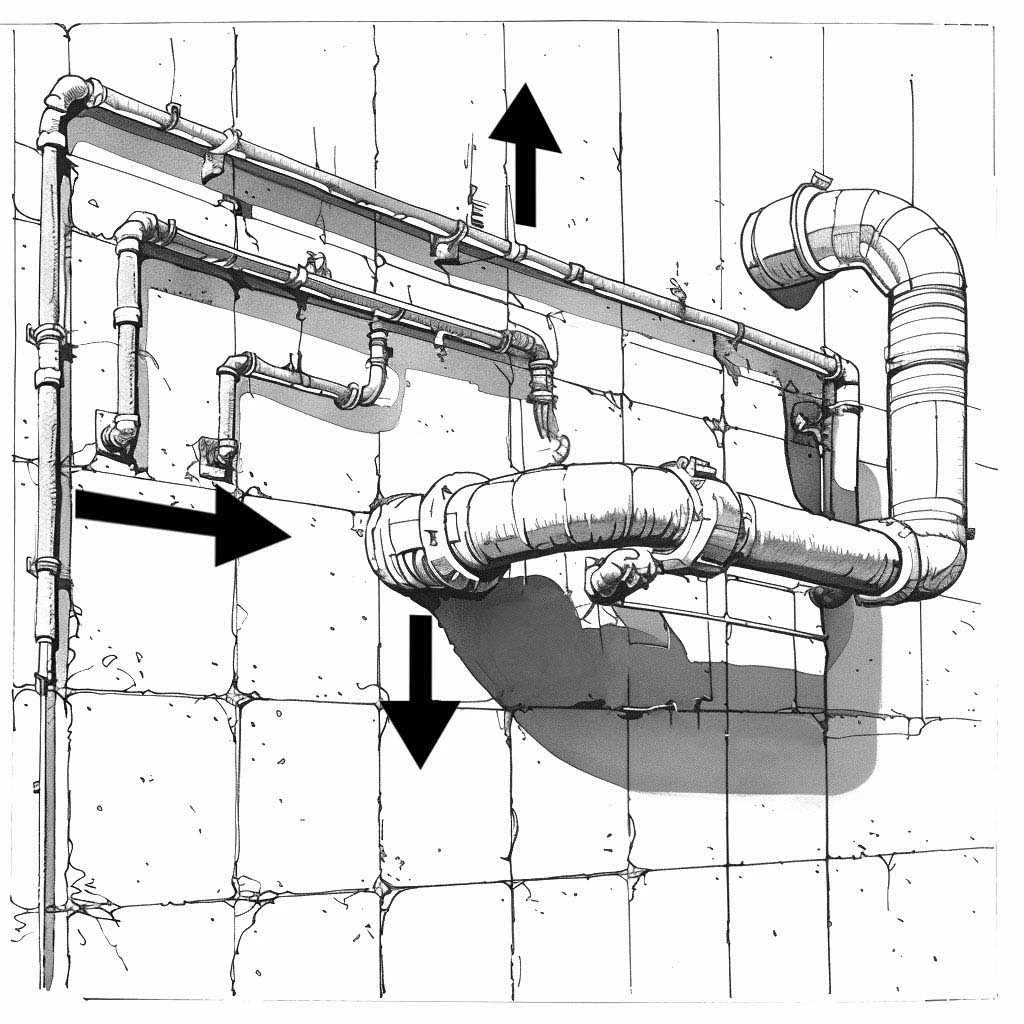

Automatically refactor interdependent components in relation to the user’s edits across teams and external contractors. Adjust a car's shape, and wirefeeds update; move a wall, and the HVAC layout adapts.

Logistics

Manage supply chains, schedule construction and production, coordinate workstreams, and interface with external data sources.

Safety + Regulation

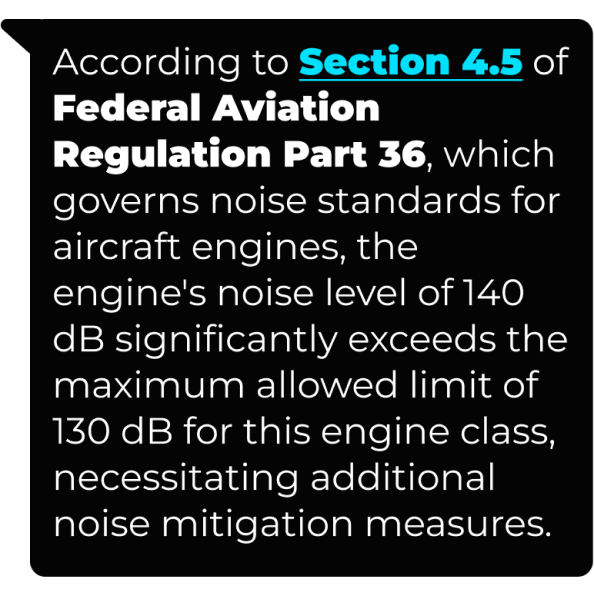

Conduct regulatory research, author safety plans, and manage filings for permits and testing to ensure the user’s work complies with guidelines and standards.

Legal

Conduct legal research, author patents, and create Terms-of-Service documents, ensuring legal compliance throughout the user’s project.

Sourcing

Refactor designs around factors like parts availability, cost, durability, and performance. Inform users’ decisions with agentic trade-off analysis and automate purely beneficial adjustments.

Manufacturing

Optimize designs for manufacturability to enhance reliability, reduce waste, simplify processes, and boost durability using AI guidance and automated adjustments.

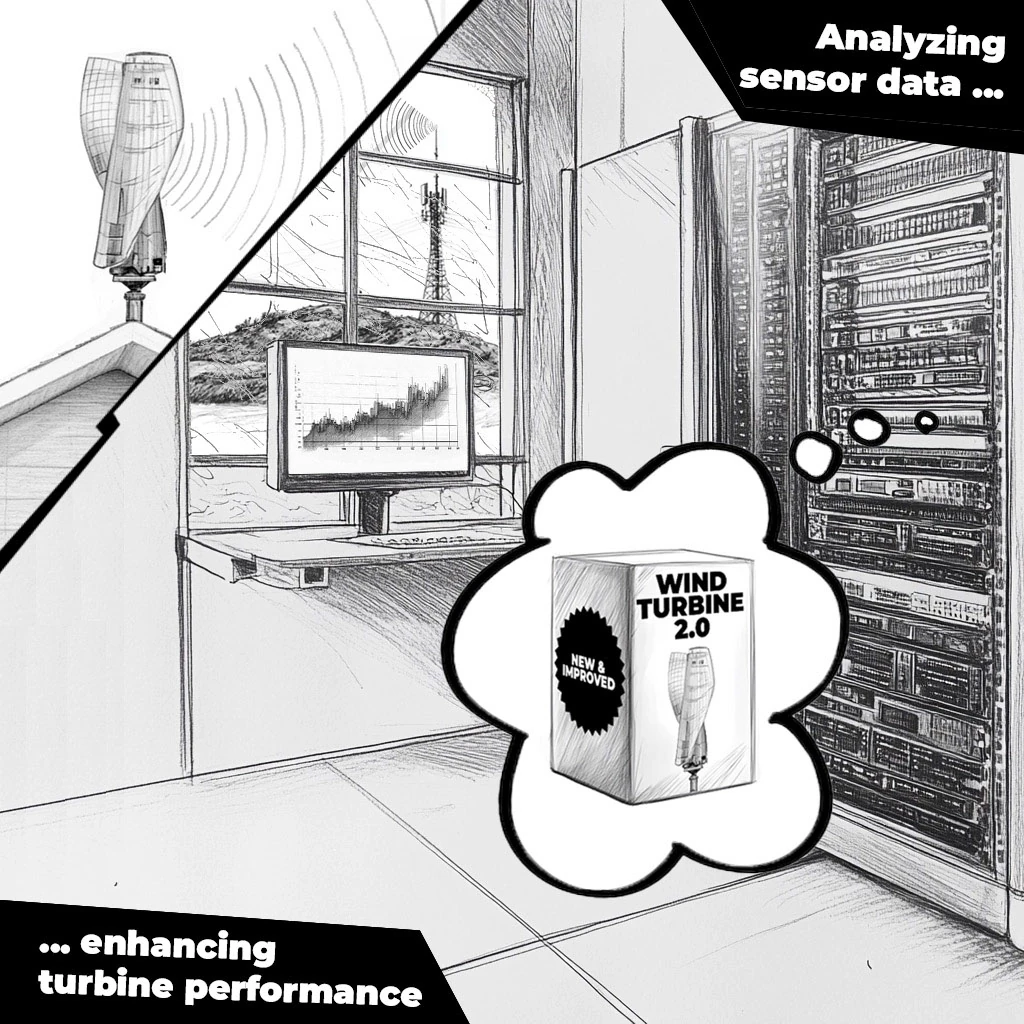

Post-Deployment

Analyze sensor data, product analytics, and user feedback to automatically enhance future product iterations with crowdsourced insights.

Go-to-Market Strategy

Create a winning plan for bringing the user's product to market. Develop GTM strategy by researching the product landscape, user needs, and market openings.

Marketing

Generate and update marketing materials and advertisements. Target the right customers and keep content aligned with product changes.

Customer Experience

Automatically generate user manuals and support materials as well as provide live customer and technical support that utilizes internal knowledge of the product.

Disruptions in the software industry have generally focused on improving tools without requiring significant changes to the existing roles and pipelines in which those tools are embedded. The coming transformation of design and engineering software will be far more profound.

The capabilities discussed in this essay suggest a shift in how project ideas originate, who contributes to their development, and how they flow between disciplines. This shift will not only reshape the tools but transform the industries they serve – changing how work is done, how businesses operate, and who can contribute to the process. It is not just an upgrade to the software within the pipeline; it is a fundamental restructuring of the pipeline itself.

The design and engineering software pipeline is both intricate and deeply interconnected, qualities that have largely shielded it from disruption over the past few decades. Let’s take a look at what is needed to catalyze its transformation in the AI age:

Some components of the design and engineering stack are difficult to replace for reasons of technical complexity, existing IP restrictions, or the lack of open-source offerings. While the proposed system aims to disrupt existing tools, certain elements will need to remain in their conventional form for the time being.

Over time, these components could potentially be rewritten. In other cases, paradigm shifts will render certain methods obsolete – such as the transition from explicit to implicit geometry representations. For now, though, the most expedient path will be to integrate permissively licensed open-source solutions where possible and adopt existing tools through licensing or partnerships when necessary.

| Computational Geometry | Physics | Graphics | Constraint Solving | Manufacturing |
|---|---|---|---|---|
| Solid Modeling<br>Enables the creation and manipulation of solid geometries<br>Constructive Solid Geometry, Boundary Representation, Boolean Operations | Finite Element Analysis<br>Simulates physical phenomena under various conditions<br>Finite Element Method, Meshing, Stress Analysis, Strain Analysis, Deformation Analysis | Real-time Rendering<br>Provides immediate visual feedback during modeling<br>Rasterization, Z-buffering, Shading Models, Texture Mapping | Parametric Constraints<br>Maintains geometric relationships for parametric design changes<br>Constraint Solving, Parametric Equations, Dependency Graphs | Toolpath Generation<br>Creates CNC machining toolpaths based on part geometry<br>Toolpath Planning, Path Optimization, Collision Detection |
| Surface Modeling<br>Handles the creation and modification of complex surfaces<br>NURBS, Bezier Surfaces, Surface Tessellation, Subdivision Surfaces | Computational Fluid Dynamics<br>Optimizes product designs by simulating fluid flow and heat transfer<br>Navier-Stokes Equations, Turbulence Modeling, Heat Transfer Analysis, Mesh Generation | Ray Tracing<br>Produces high-quality photorealistic renderings by simulating the behavior of light<br>Ray-Object Intersection, Light Transport, Reflection and Refraction, Path Tracing | Assembly Constraints<br>Manages the positioning and movement of components within assemblies<br>Constraint Solving, Mating Conditions, Kinematic Analysis | Simulation<br>Verifies toolpaths and detects machining issues<br>Machining Simulation, Collision Detection, Tool Deflection Analysis |
| Mesh Modeling<br>Deals with polygonal meshes and their operations<br>Mesh Repair, Mesh Simplification, Smoothing Algorithms, Boolean Operations | Motion Simulation<br>Models the kinematics and dynamics of mechanical systems<br>Kinematic Equations, Dynamic Equations, Force Analysis, Torque Analysis | Visualization / UI<br>Supports advanced visualization techniques and UI rendering<br>Rasterization, Ray Tracing, Z-buffering, Anti-Aliasing, Occlusion Culling, Shadow Mapping, Texture Mapping | Geometric Constraints<br>Ensures specific geometric relationships and properties within designs<br>Distance Constraints, Angle Constraints, Perpendicularity, Parallelism | Post-Processing<br>Converts toolpaths into machine-specific code<br>G-code Generation, Machine Customization |

Note: This overview does not cover all functional domains. Numerous domain-specific features in areas like photogrammetry can also be leveraged from existing products.

To use conventional components within AI-driven systems, these components must be adapted for integration with agent systems. This involves refining APIs, modularizing capabilities into scalable microservices, and authoring thorough documentation.
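One way to picture this adaptation is a thin wrapper that pairs each conventional capability with machine-readable documentation and a uniform calling convention. The Python sketch below is an illustration under stated assumptions, not a description of any particular agent framework: the `Tool` type, the `REGISTRY`, the request format, and the `box_volume` function (a stand-in for a call into a real geometry kernel) are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    """A conventional capability exposed to agents with machine-readable docs."""
    name: str
    description: str
    parameters: dict[str, str]  # parameter name -> human-readable description
    run: Callable[..., Any]


def box_volume(width: float, depth: float, height: float) -> float:
    """Hypothetical stand-in for a call into a real geometry kernel."""
    return width * depth * height


REGISTRY: dict[str, Tool] = {}


def register(tool: Tool) -> None:
    """Add a tool to the catalog that agents can browse and call."""
    REGISTRY[tool.name] = tool


register(Tool(
    name="box_volume",
    description="Compute the volume of an axis-aligned box.",
    parameters={
        "width": "Width in meters",
        "depth": "Depth in meters",
        "height": "Height in meters",
    },
    run=box_volume,
))


def describe_tools() -> str:
    """Return the catalog an agent would read to decide which tool to call."""
    catalog = [
        {"name": t.name, "description": t.description, "parameters": t.parameters}
        for t in REGISTRY.values()
    ]
    return json.dumps(catalog, indent=2)


def call_tool(request_json: str) -> Any:
    """Dispatch a request of the form {"tool": ..., "arguments": {...}}."""
    request = json.loads(request_json)
    tool = REGISTRY[request["tool"]]
    unknown = set(request["arguments"]) - set(tool.parameters)
    if unknown:
        raise ValueError(f"Unknown arguments for {tool.name}: {sorted(unknown)}")
    return tool.run(**request["arguments"])


print(describe_tools())
print(call_tool('{"tool": "box_volume", "arguments": {"width": 2, "depth": 3, "height": 4}}'))
```

The point of the sketch is the separation of concerns: the underlying component stays in its conventional form, while the wrapper supplies the documentation and validation that an agent needs in order to use it safely.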

High-quality documentation for APIs and domain-relevant knowledge bases is critical for training LLMs and building retrieval-augmented generation (RAG) databases. Mechanisms to keep these resources up-to-date are also needed to ensure the system remains effective over time.
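The retrieval step behind a RAG database can be sketched in a few lines of Python. This example deliberately swaps in word-count cosine similarity for the learned embeddings a production system would use, and the documentation snippets, ids, and function names are hypothetical placeholders rather than content from any real product.

```python
import math
import re
from collections import Counter

# Hypothetical documentation snippets; in practice these would be chunks of
# real API docs and domain references.
DOCS = {
    "fillet": "Fillet rounds the selected edges of a solid with a given radius.",
    "extrude": "Extrude turns a closed sketch profile into a solid by sweeping it along a distance.",
    "mesh_repair": "Mesh repair closes holes and removes duplicate vertices in a polygonal mesh.",
}


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the ids of the k snippets most similar to the query."""
    q = tokenize(query)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(q, tokenize(DOCS[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    """Prepend the retrieved snippet so a model can ground its answer in it."""
    context = "\n".join(DOCS[doc_id] for doc_id in retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How do I close holes in a mesh?"))
```

Because answers are grounded in whatever the store contains, stale or thin documentation degrades results directly, which is why mechanisms for keeping these resources current matter as much as the retrieval method itself.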

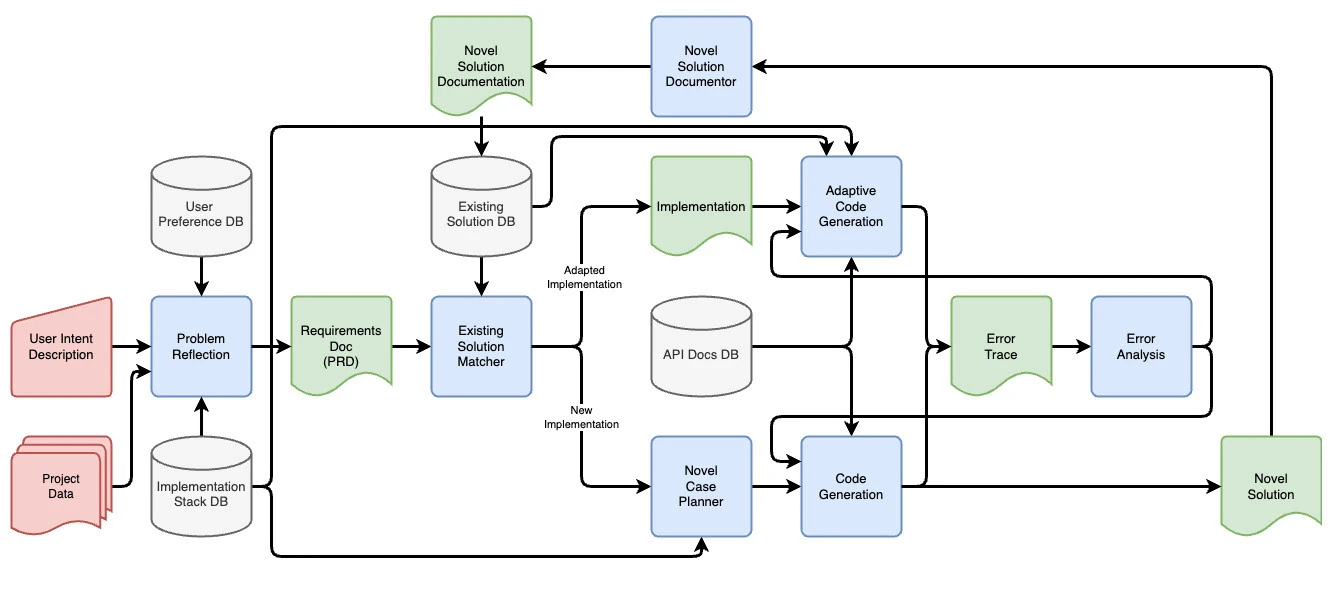

To develop an agent-driven platform for design and engineering, we need to devise a core architecture capable of reasoning through complex problems, breaking them into actionable parts, and orchestrating a wide range of actions from invoking conventional tools to engaging with users and coordinating vendors.

The core architecture consists of foundational components for reasoning, memory, generation, perception, and external systems integration:

| Reasoning | Memory | Generation | Perception | Externality |
|---|---|---|---|---|
| Analyze complex problems, devise strategic solutions, and evaluate results to adapt and improve | Store, organize, and retrieve information to support learning and decision-making over time | Create code, content, solutions, and ideas | Interpret and integrate sensory and contextual data to make informed decisions | Leverage external tools and simulations to enhance capabilities, troubleshoot issues, and expand knowledge through exploration |
| Problem Analysis<br>Planning + Strategy<br>Exploration + Expansion<br>Decision-making<br>Reflection | Organization<br>Storage + Retrieval<br>Knowledge Management<br>User Awareness<br>Improvement | Code<br>Assets<br>Supporting Materials | Visual + Spatial Perception<br>Data Interpretation<br>Multimedia Perception<br>Interaction + Feedback | Tool Use<br>Simulation<br>Solvers + Optimization<br>Diagnostics<br>Research + Exploration |

We should avoid being overly prescriptive in defining the core system architecture.

Though predefined architectures are easier to conceptualize and offer initial conveniences, they embed assumptions that limit the system’s adaptability. We should instead aim for a Minimally-Explicit Architecture – one that allows the system to evolve its memory structures, reasoning processes, and workflows as it learns from outcomes.

In addition to avoiding suboptimal paths tied to historical assumptions, a streamlined codebase built around a small, reconfigurable set of foundational components will reduce system complexity and improve maintainability.

This approach draws inspiration from the efficiency of natural systems, where simple building blocks and patterns are reused at numerous levels to construct complex systems, laying the foundation for an efficient and open-ended platform capable of tackling new problems as they emerge.

(In a follow-up essay, I will present a deeper technical exploration of the foundations for a Minimally-Explicit Architecture in agent-driven systems.)

The transformative potential of the technologies we’ve discussed can only be realized if individuals and institutions are willing to change how they work. This is a challenging proposition because architecture firms, product design companies, and similar organizations have spent years refining their workflows and have made substantial investments in tools, training, and infrastructure, creating a strong disincentive to adopt unproven alternatives.

Shifting to a new paradigm often comes not only with cultural resistance but also technical hurdles such as file compatibility issues and the challenge of meeting existing client and vendor requirements. To succeed, new tools cannot simply innovate; they must also provide a practical path for legacy users to transition to a new way of working.

Unlike previous generational shifts in software, workflow migration efforts will benefit from LLMs' ability to translate data structures or call specialized tools to handle legacy formats, significantly reducing the technical friction of adoption. When it comes to user readiness, though, levels of comfort and adoption will vary widely. While some will immediately embrace the new paradigm, others may require a more gradual introduction to build confidence and familiarity.

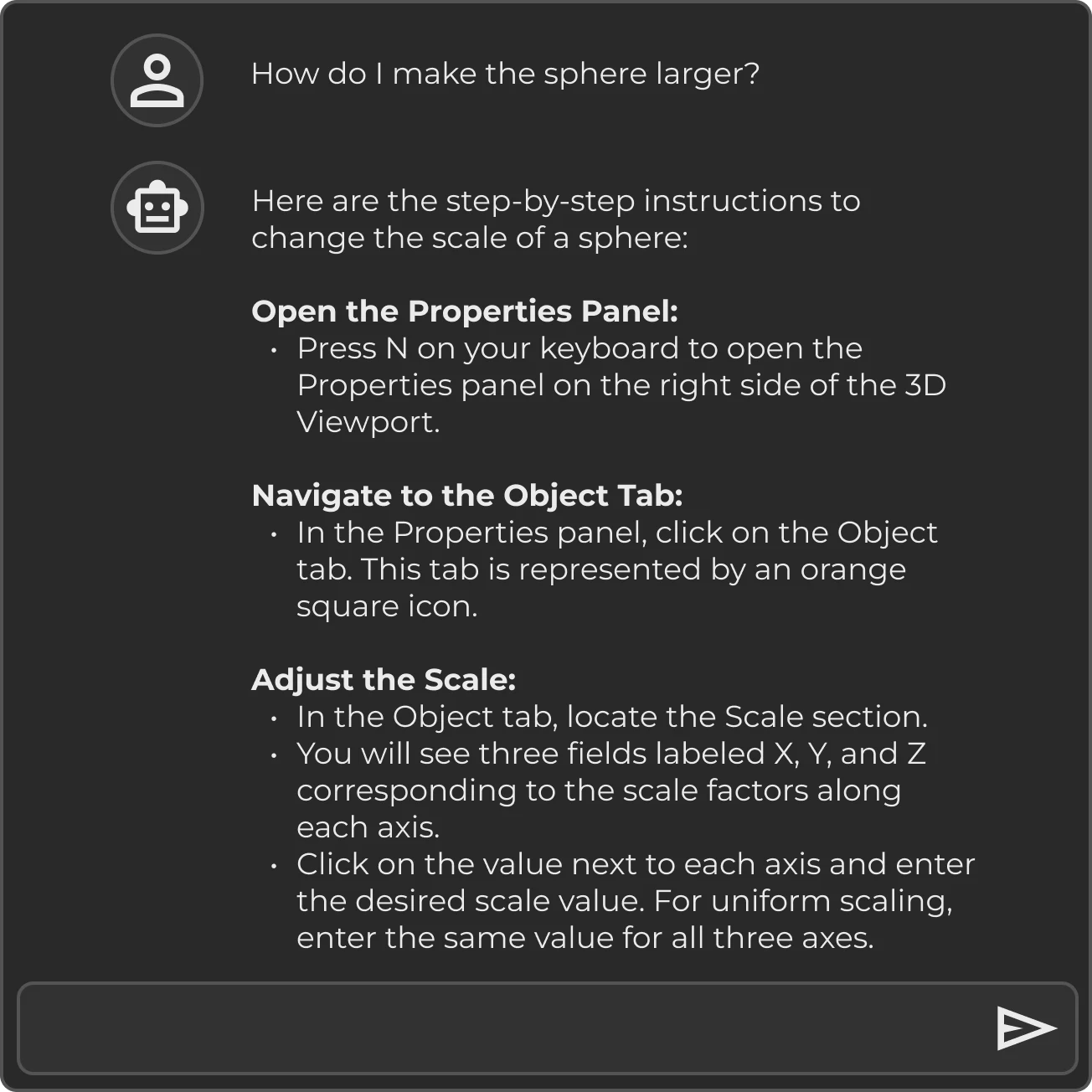

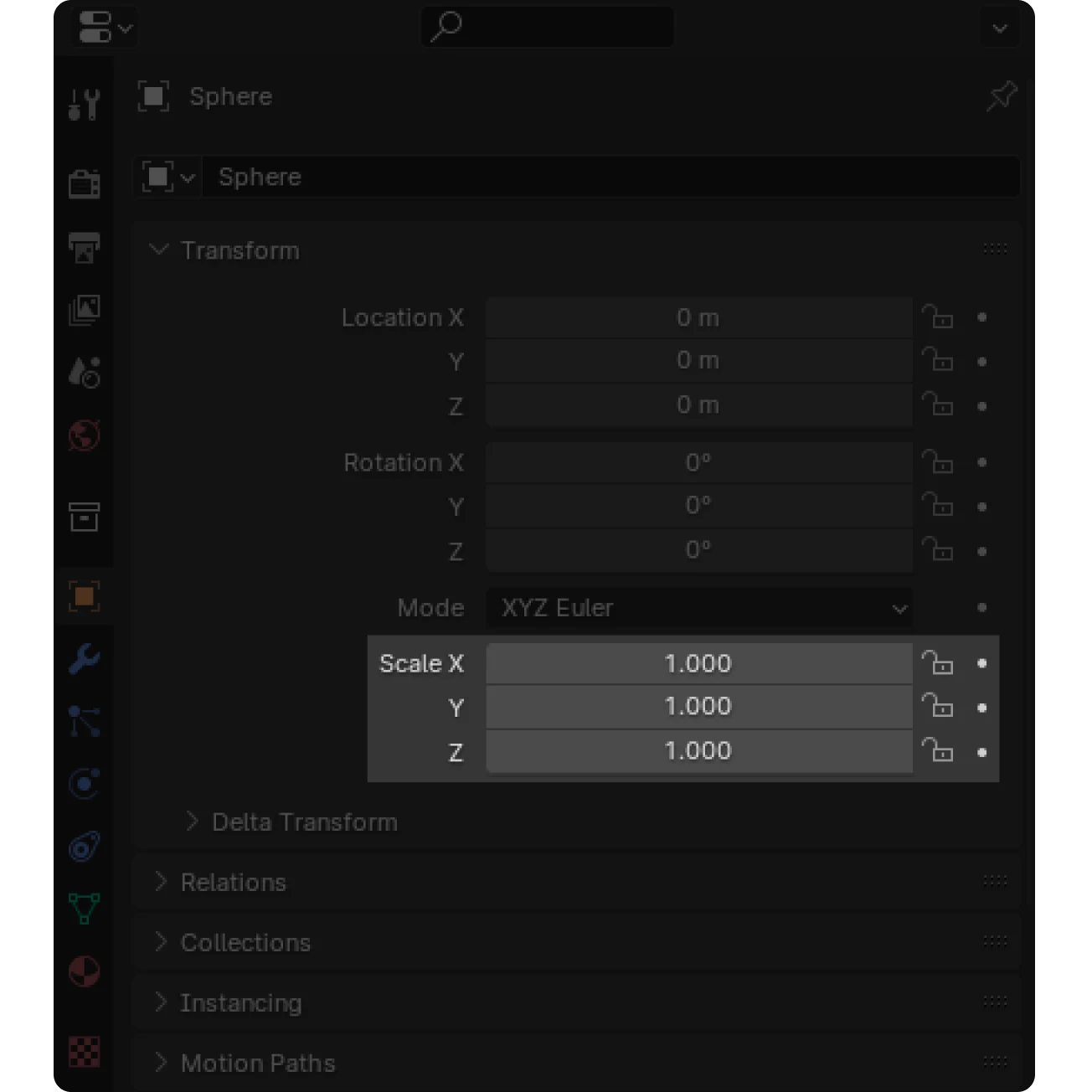

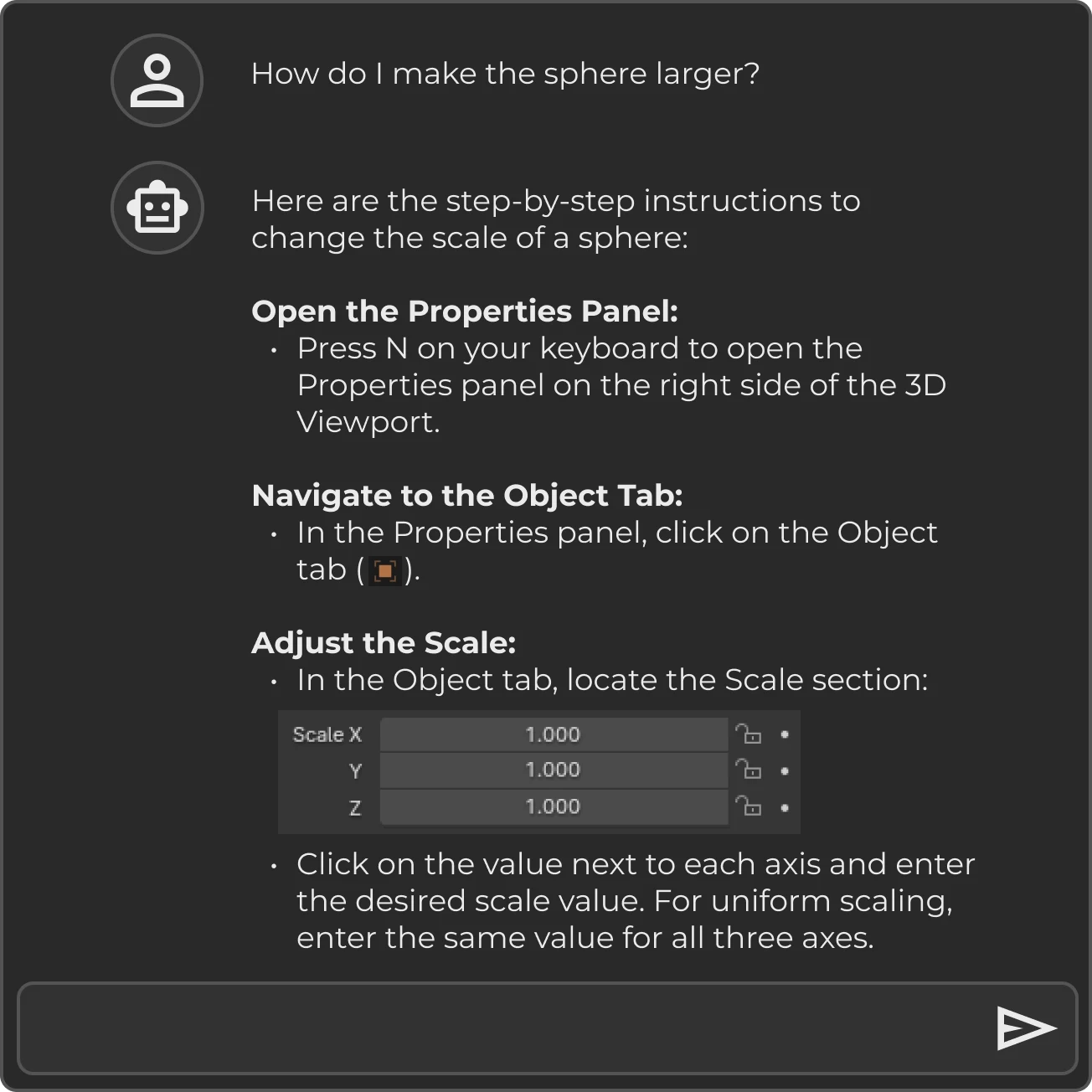

In the early days of generative AI adoption, legacy product vendors have begun to take small steps toward AI integration, often through isolated text-based assistant interfaces. As previously discussed, co-pilots have limited capacity to support users in complex workflows. However, legacy developers need to start somewhere.

Text-based assistants can be especially frustrating in design tools, where users must navigate complex interfaces with many unlabeled icons. Users often struggle to connect the assistant’s descriptions with the application’s UI.

This limitation could be diminished through just-in-time interfaces that re-present visual cues or even replicate the host tool’s interfaces directly within the chatpod. Though the co-pilot strategy is ultimately limited, it can at least be progressively improved upon.

Chat Assistant

Text-based assistant provides in-app help and instructions for using the application with minimal intervention.

Viewport Overlays

Viewport overlays extend the text-based assistant, providing visual indicators to support in-app help and instructions.

Inline Screenshots

Inline screenshots of UI elements enhance the text-based assistant, providing clear visual references to support in-app instructions.

Extracted UI

Replicates functional UI elements within the assistant interface, enabling users to make changes without directly navigating the app UI.

Reformulated UI

Creates new controls for existing attributes within the assistant interface, aligning with the user’s preferred working style rather than app defaults.

Synthetic UI

Restyles the interface based on user preferences and synthesizes attributes, offering more intuitive and streamlined controls, such as unified scaling for multiple axes.

Some technical capabilities can be integrated into design software without radically altering the interaction mechanisms that users already know. Other features, however, would be inconceivable within the current interaction paradigm and require an application-level transformation to become viable.

Under-the-Hood Transformation

Features that can be improved by algorithmic enhancements without altering the application's interfaces and workflows.

Feature-level Transformation

Features that require new but self-contained interfaces and therefore fit into the existing application and workflow, enriching the user experience without broad disruptions.

Application-level Transformation

Features that necessitate new interfaces and a broad rethinking of the interaction paradigm to fully capitalize on the potential advantages brought by the new technology.

One way to help users adopt new features is through an inside-out approach to product transformation.

Many legacy product users don’t want their workflows disrupted but often need to augment their tools through scripting or plugins to achieve particular outcomes. Just-in-time functionality creation bridges this gap by enabling users to describe their needs in simple terms and customize features, add specialized functionality, or tailor tools to their unique workflows – all without requiring technical expertise. By infusing these capabilities into legacy products, users gain a scripting-like ability to evolve tools and workflows at their own pace.

Another way to approach this transition is to view the AI-driven platform as a superset of all legacy applications in the sense that it can emulate their interfaces and behaviors through just-in-time functionality and function-calling.

As such, users could migrate to a tool that looks and behaves identically to the legacy software they already know. Over time, the user can adopt incremental changes or embrace the full transformation when ready.

This approach also benefits the platform developers by enabling the consolidation of codebases into a streamlined implementation stack: significant portions of the system’s implementation work could be outsourced to third-party AI and hardware-acceleration frameworks that power the system’s core AI components.

While incremental approaches can ease migration challenges for users, the most transformative opportunities for AI in design and engineering ultimately require application-level transformation. The ideas discussed in this essay represent not just new features but a fundamentally different methodology – one that integrates AI into the very heart of design and problem-solving processes.

A true paradigm shift cannot be achieved through iterative feature additions alone. To enable users to articulate their ideas more naturally and to allow AI to actively participate in experimentation, refinement, and discovery, we need new software and experience architectures that position AI as a core collaborator and enable entirely new ways of interacting with design material.

For users to fully unlock the potential of these transformative technologies, we must provide them with a completely reimagined frontend experience. Under the hood, this experience can drive agents that interact with conventional APIs and GUI applications running in containers. The frontend, however, should mediate these underlying capabilities and offer an experience that aligns with the user’s proclivities and the nature of the work at hand.

As we move from enhancing individual features to rethinking entire applications, we gain opportunities to define entirely new products and even product categories that differ in whom they serve and how they deliver value:

Existing Products

Integrate AI capabilities into existing tools without disrupting workflows. Enable users to gradually fine-tune how they work by requesting specific augmentations of the tool.

New Products

Create new products with AI-driven JIT features and interfaces, avoiding static workflows and overwhelming menus. Help users focus on tasks and solve problems in an enjoyable, personalized way.

Remix Products

Create pre-curated, domain-specific apps using the JIT architecture. Target new markets and quickly launch solutions to emerging needs by remixing existing functionality for specific use cases.

In addition to feature-level enhancements to Existing Products and comprehensive just-in-time extendable New Products, Remix Products offer a new way to reach both existing and new customers. These products could provide domain-specific functionality for use cases such as car configurators.

Specialized applications open up entirely new avenues for user engagement and problem-solving. These offerings can serve a variety of purposes, such as helping users focus on a specific task or problem, providing quick access to relevant features within the platform, or even simply sparking intellectual curiosity.

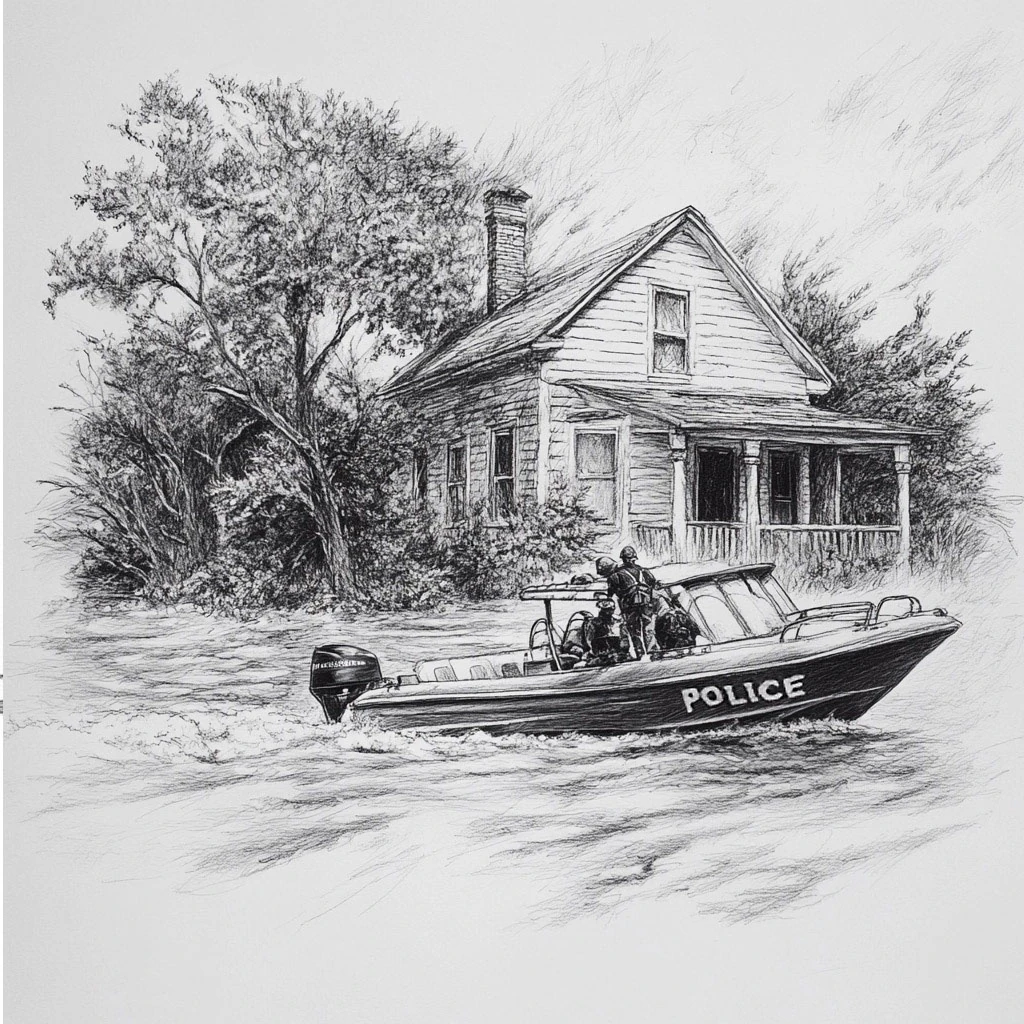

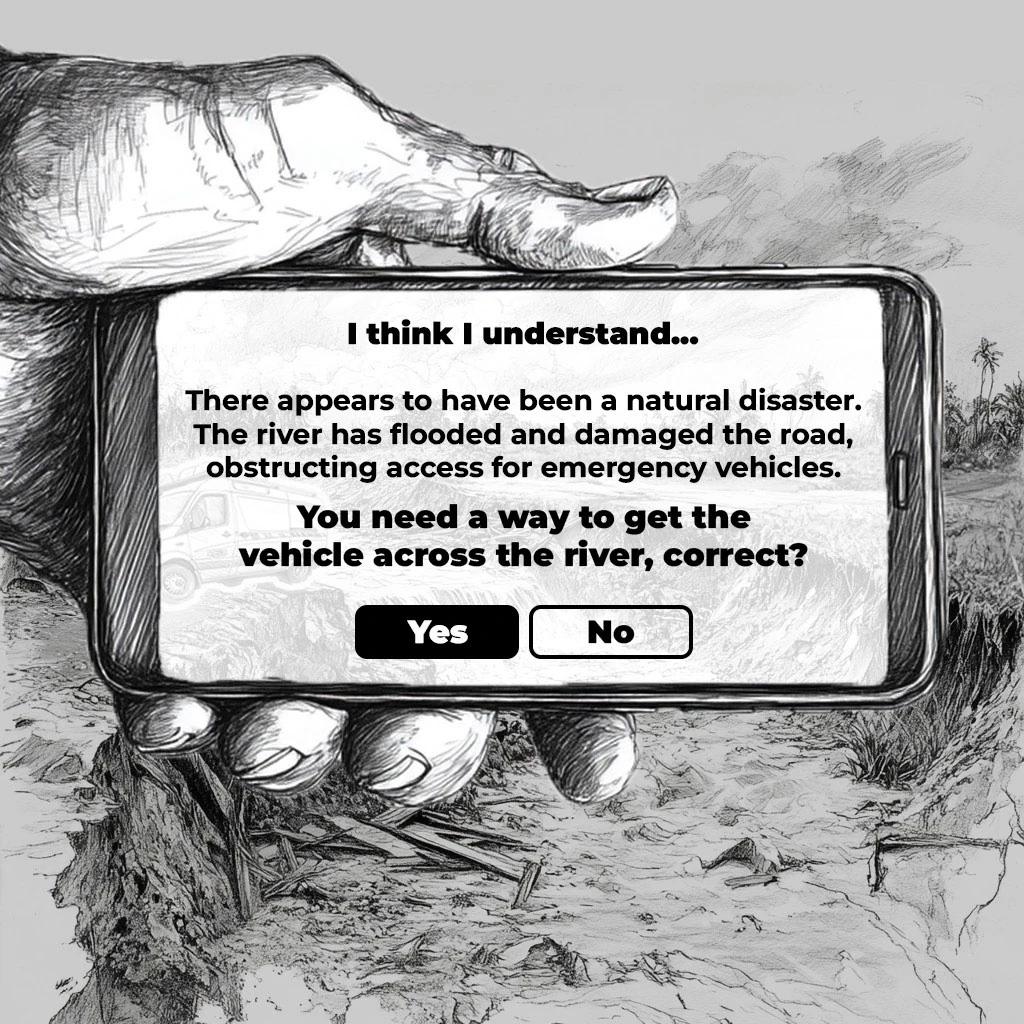

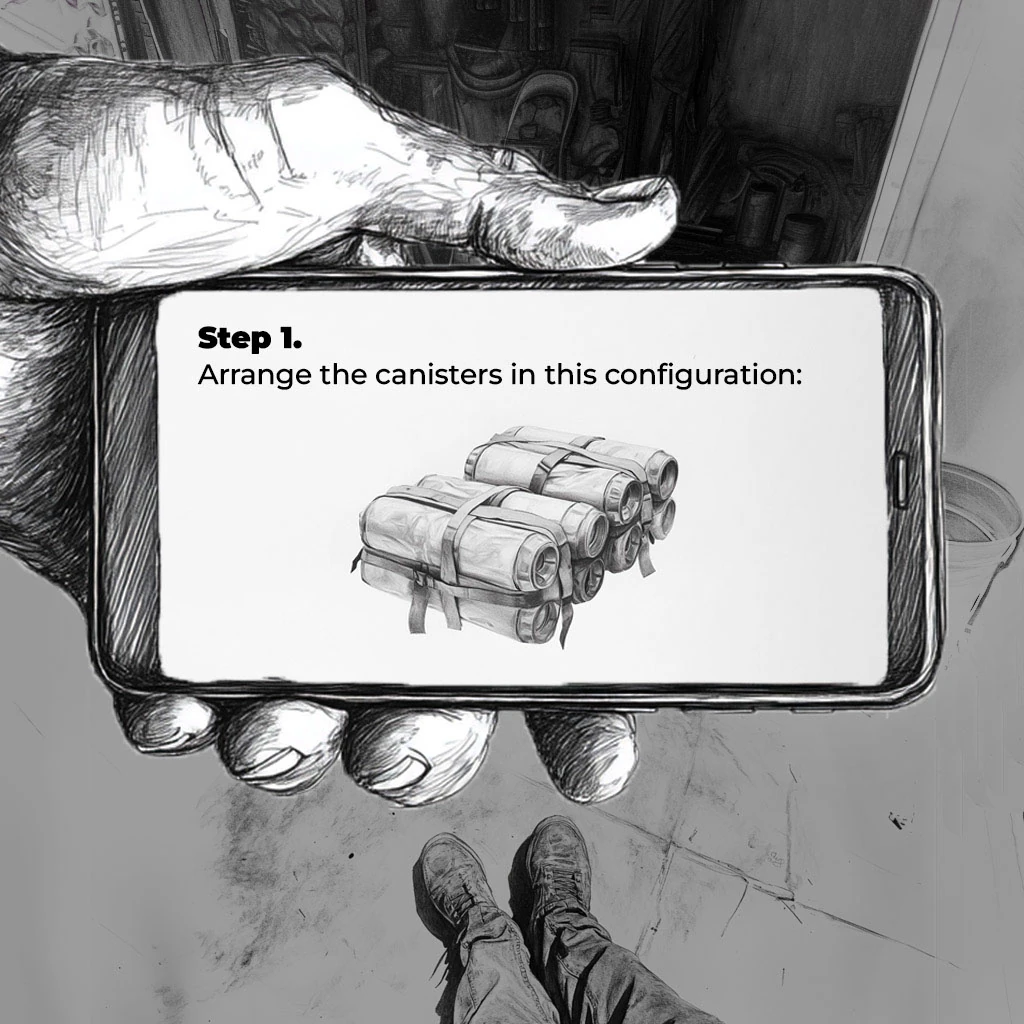

Problem Solver

Enable users to point their phone at a problem for just-in-time instructions or have a solution built and shipped by a manufacturing partner.

SEO Endpoints

Acquire users through search engine results with highly relevant JIT web apps custom-tailored to their needs and use cases.

Public Mindshare

Enrich the public and build goodwill by showing commitment to advancing humanity in an AI-driven world.

These targeted applications can also take the form of purpose-built extensions of general-purpose tools, facilitating narrowly focused workflows and distributed collaborations. For example, a dedicated app could enable users to perform a 3D scan of an environment, solicit collective input, or facilitate a Kickstarter-style initiative to crowdsource goals and constraints for a community project.

Auxiliary Interfaces

Enables users to seamlessly bring their work into any context by generating auxiliary apps and interfaces for specialized on-site tasks.

Collective Engagement

Provides users with JIT apps that facilitate collective input, feedback, and decision-making within specific groups.

Collaborative Design

Enables users to collaborate on product design by defining features and providing feedback via a custom-tailored application.

Taken together, these product offerings demonstrate how AI-driven systems can support a far broader range of goals and contexts than traditional software ever could. By moving beyond static feature sets and entrenched workflows, we create a vibrant ecosystem of tools and services that align with our rapidly evolving world.

Given the many ways in which AI is poised to reshape who can participate in design and engineering work, how they collaborate with their tools, and which tasks those tools can handle autonomously, it stands to reason that a substantial reenvisioning of the business models associated with these tools will also be necessary.

A key factor that suggests the need for new business models is that within this AI-driven system, users will be able to choose how much effort and resources they wish to allocate to agents’ efforts. A modest project might require minimal exploration, while a more ambitious or mission-critical endeavor could justify a significantly greater volume of experimentation and development work. With this variability, boxed software or even flat subscription models are no longer practical. Instead, aligning costs either to the effort invested or the value delivered would be more sensible.

AI’s capacity to play a meaningful role in problem-solving and execution broadens the addressable market and the range of outcomes the system can deliver. Rather than merely responding to user requests, the proposed agentic system can identify promising ventures, propose novel solutions, or pursue humanitarian goals. This opens new opportunities for direct value creation – shifting the focus from selling software as a tool to delivering outcomes.

With these new dynamics, it seems sensible to draw inspiration from consulting models used in client-service industries, such as Agile and Waterfall. Much like Agile development, a Pay-by-Compute model offers users flexibility to iterate and experiment, controlling costs based on usage but without a guaranteed outcome. In contrast, a Pay-by-Solution model, akin to Waterfall, shifts the risk to the provider, who commits to delivering a specific outcome for a fixed price. A Direct-to-Consumer model positions software producers as vertically integrated entities, leveraging AI to identify opportunities, develop solutions, and deliver products directly to end users, bypassing traditional intermediaries and creating a self-contained pipeline that redefines how value is generated and captured.

Pay-by-Compute

Platform Model

A scalable team of geniuses ready to build the future.

Access as much or as little innovation as the customer needs.

Pay-by-Solution

Service Model

Amazon for things that haven’t been invented yet.

Novel solutions to real problems, delivered right to your door.

Direct-to-Consumer

Vertical Model

A self-sufficient product company run by machines.

AI spots opportunities, develops + markets products.

AI can drive meaningful change through practical applications in functional design and engineering. From tackling climate challenges to enhancing everyday conveniences, AI empowers innovation that can genuinely improve people’s lives.

Our goals for a world-changing technology should not be meager. Yet we cannot simply tackle the most critical, cutting-edge problems at the outset. We need a technical strategy that builds from simpler challenges toward increasingly complex ones, allowing the system to accrue capabilities organically.

AI agents can guide this progression by reasoning about which problem to tackle next, weighing the potential real-world impact of a solution against the effort required to extend existing capabilities.

To reason effectively about these choices, we need a value function that formalizes the trade-offs involved in determining which problems to tackle. This function should balance the potential impact of solving a problem against the effort required to do so, while also considering how a solution might enhance the system’s ability to take on more complex challenges in the future. By structuring decisions in this way, the system can prioritize actions that not only achieve meaningful outcomes but also pave the way for continued growth.

These considerations can be expressed through an equation like: $$ V = \frac{(EV \times SGF) + AF}{E + 1} $$

Where:

| Symbol | Meaning |
|---|---|
| $EV$ | Expected Value (Risk-Adjusted): Potential real-world benefit of problem's solution |
| $E$ | Effort (Risk-Adjusted): Resource demands of current capabilities |
| $SGF$ | Strategic Growth Factor: Impact on future problem-solving capabilities |
| $AF$ | Alignment Factor: Degree of alignment with human needs and values |
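As a minimal sketch of how this value function might be used to rank candidate problems, the following Python snippet evaluates $V$ for a few hypothetical candidates. Only the formula comes from the text; the candidate names, the field names, and every numeric score are assumptions invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A candidate problem with illustrative (made-up) scores."""
    name: str
    expected_value: float    # EV: risk-adjusted real-world benefit
    effort: float            # E: risk-adjusted resource demands
    strategic_growth: float  # SGF: impact on future capabilities
    alignment: float         # AF: alignment with human needs and values


def value(c: Candidate) -> float:
    """V = (EV * SGF + AF) / (E + 1), as given in the text."""
    return (c.expected_value * c.strategic_growth + c.alignment) / (c.effort + 1)


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Order candidates from highest to lowest V."""
    return sorted(candidates, key=value, reverse=True)


# Hypothetical candidates; the numbers are assumptions, not data.
candidates = [
    Candidate("static bracket", expected_value=2.0, effort=1.0,
              strategic_growth=1.5, alignment=1.0),
    Candidate("hinged mechanism", expected_value=4.0, effort=3.0,
              strategic_growth=2.0, alignment=1.0),
    Candidate("powered device", expected_value=10.0, effort=19.0,
              strategic_growth=1.0, alignment=2.0),
]

for c in rank(candidates):
    print(f"{c.name}: V = {value(c):.2f}")
```

With these made-up scores, the hinged mechanism ranks first (V = 2.25), ahead of the static bracket (V = 2.00) and the powered device (V = 0.60): a moderately harder problem wins because it does more to grow future capabilities, while the highest-value problem is held back by its effort. Note that the $+1$ in the denominator keeps $V$ finite when the effort term is zero.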

The iterative process described above will lead to an “innovation flywheel” in which each success builds on those before it, lowering barriers to more ambitious goals and compounding the system’s capacity to tackle increasingly diverse and demanding challenges. At the same time, human input and oversight will ensure that the system stays aligned with human needs and priorities.

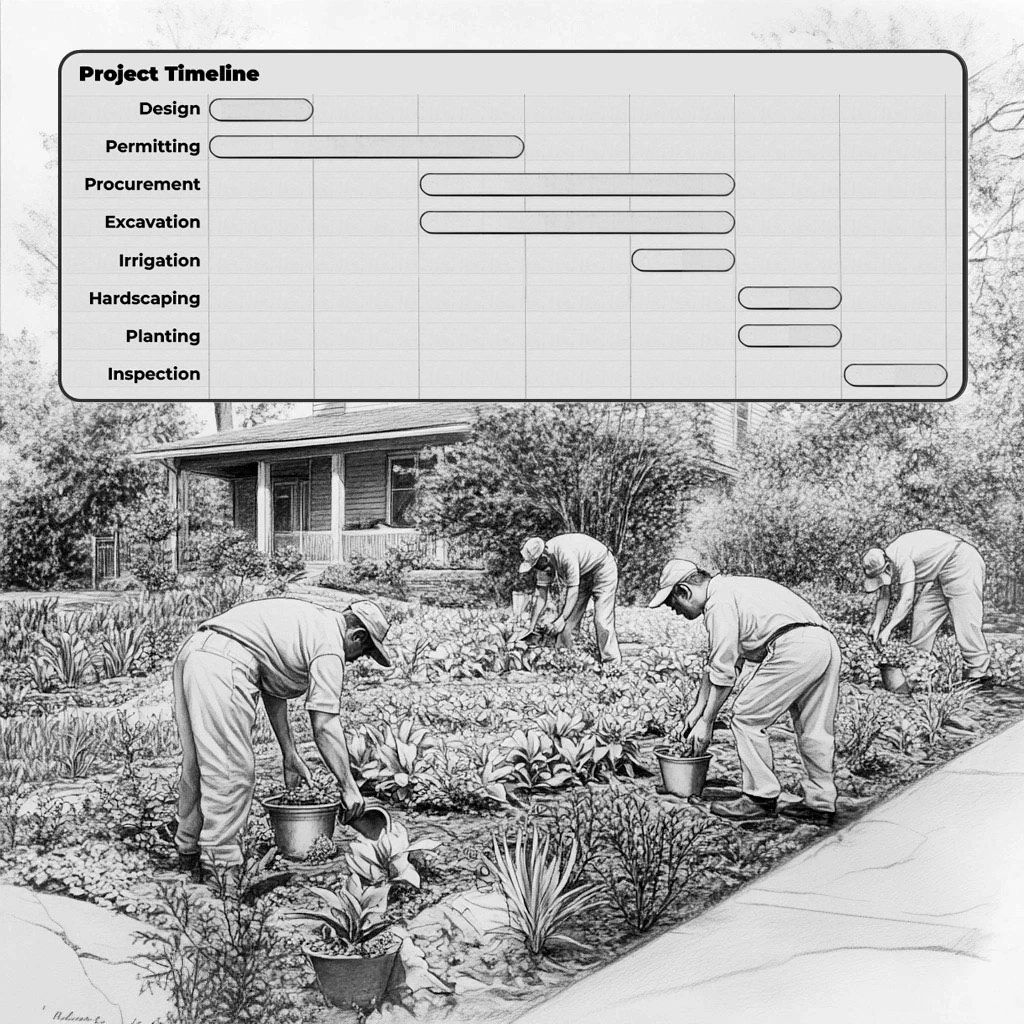

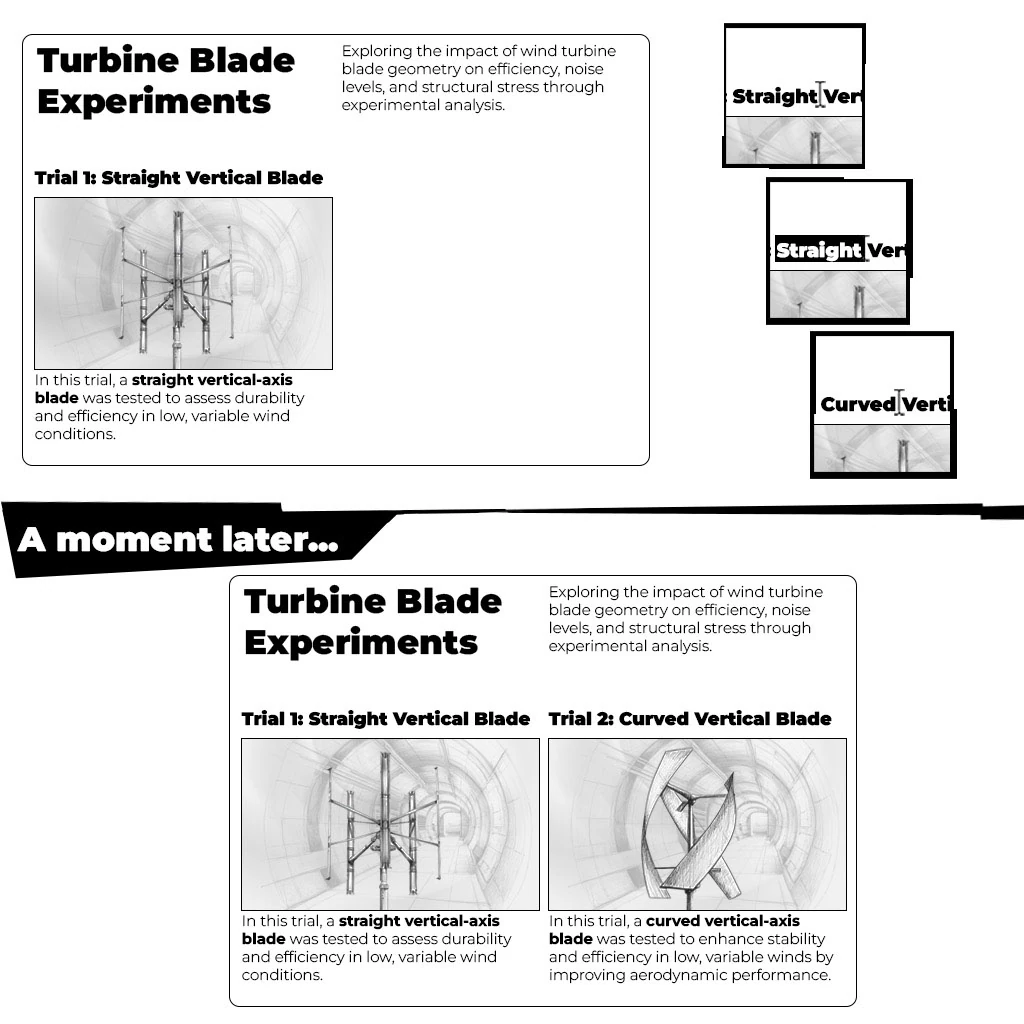

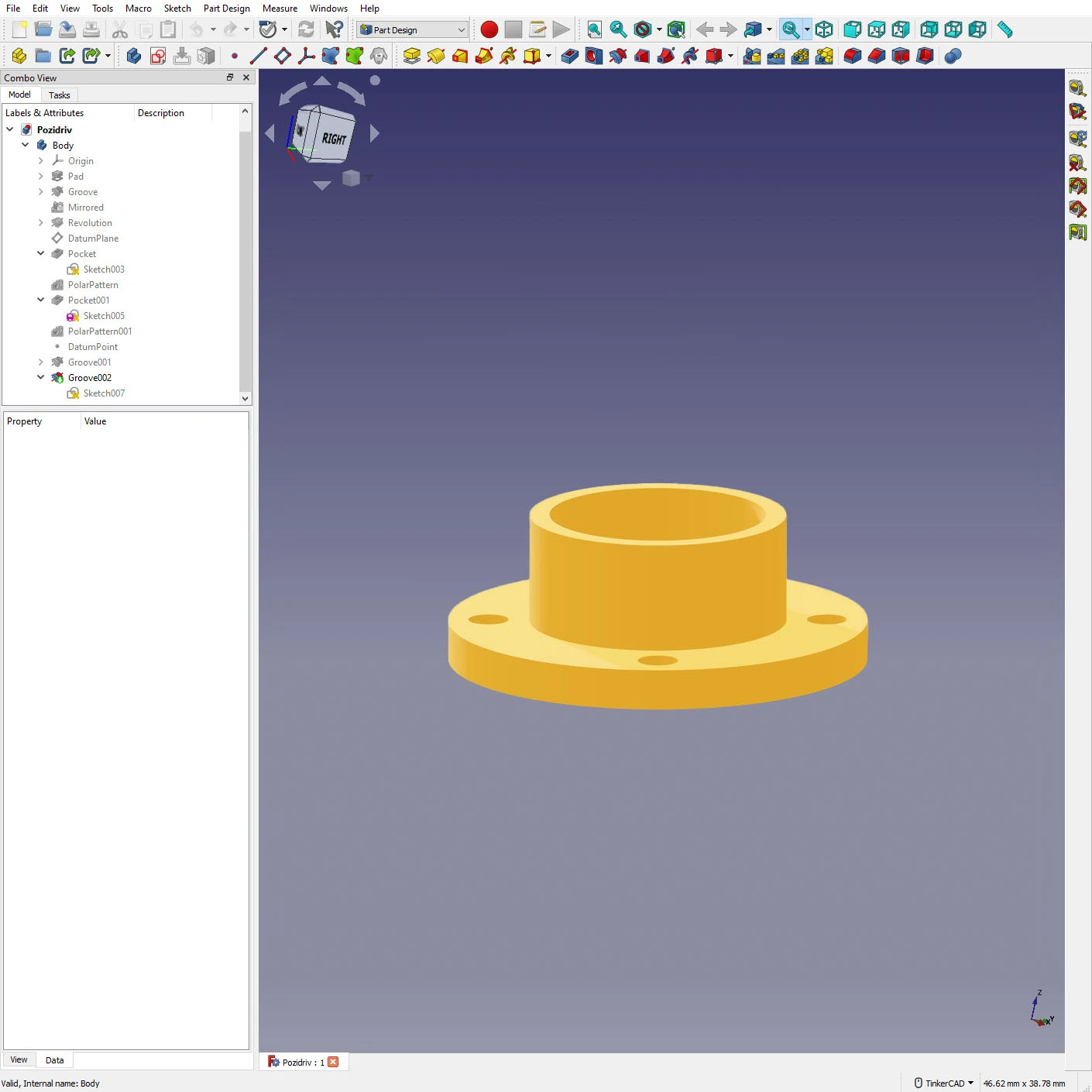

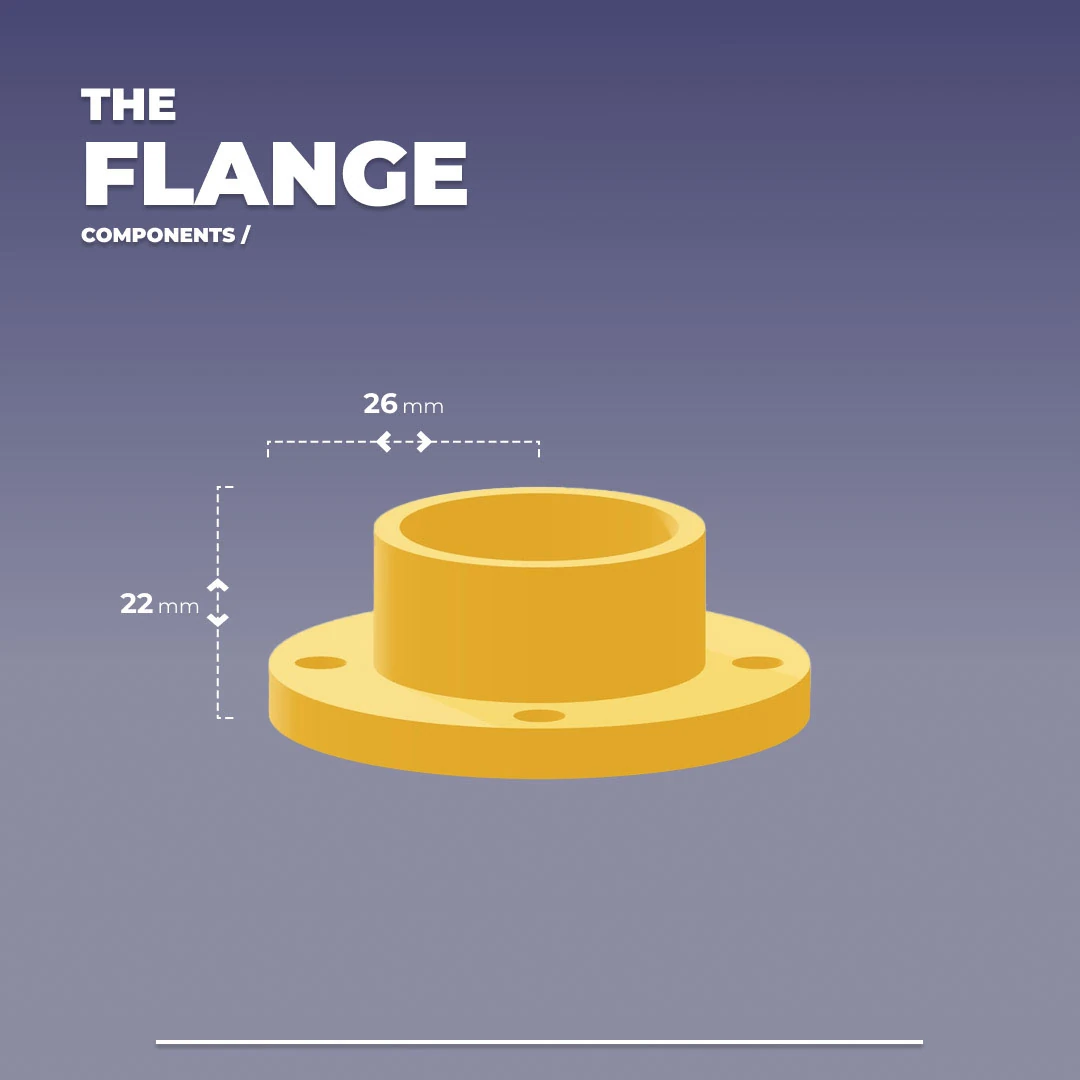

The following examples demonstrate how complexity can be scaffolded in a product design context. Beginning with static objects, then incorporating dynamic elements, and finally integrating multiple subsystems, these examples show how increasingly sophisticated challenges can be addressed progressively:

Static Object

A foundational design focusing on static structure and material properties. This establishes principles like durability, manufacturability, and ergonomics.

Dynamic Mechanism

Adds simple mechanical elements introducing motion and force transfer. Builds on static design by requiring considerations like stress, tolerances, and moving parts.

Integrated System

Combines powered mechanical components, electronics, and a UI. Requires coordinating complex interactions between subsystems.

Over time, this scaffolded approach will yield a flexible, adaptive set of capabilities equipped to address the world’s most critical challenges. By prioritizing adaptability, emergent problem selection, and incremental growth over predefined roadmaps and architectures, the system will evolve into a powerful engine for transformative change that can proactively identify unmet needs and emerging opportunities for positive change across diverse domains, ranging from addressing market gaps in the business world to tackling critical humanitarian crises and environmental challenges.

Here are just some of the many areas in which this proactive system could drive real-world innovation:

We stand at a pivotal moment. We have the foundational technologies needed to address pressing global challenges like aging infrastructure and environmental degradation, while also improving daily life through better products, homes, and workplaces. But there is more to be done, and ensuring these technologies lead to worthwhile outcomes won’t happen without the right people.

If you share my passion for enacting this transformation, I would love to connect with you.

You can reach me at impact@patrickhebron.com

For Rue and our learning machines, Lucian and Sabrina, who inspire me to work toward a better future.

Thanks to Kent Oberheu, Robin Debreuil, Nikhil Tailang, Tims Gardner, Michael Schrage, Samim Winiger, Philippe Cailloux, Tobias Rees and Kush Amerasinghe for their insights, support, and mentorship.

© Patrick Hebron, 2025. All Rights Reserved.